The psychology behind how customer reviews shape online buying decisions

Psychology shows how social proof, cognitive biases, and emotional framing steer your choices online. You rely on reviews to reduce uncertainty, but negative reviews can repel you disproportionately, while a steady stream of positive ones builds trust. Understanding these mechanisms reveals both the danger of manipulation through fake or incentivized reviews and the genuine value of authentic feedback in guiding better purchases, so you can make more discerning, evidence-based decisions.

Key Takeaways:

- Social proof drives decisions: high ratings and a large number of positive reviews lower perceived risk and increase conversion.

- Valence and averages steer choices: small differences in star ratings disproportionately affect product selection.

- Quantity and recency matter: many recent reviews signal reliability and relevancy more than a handful of old reviews.

- Content quality influences expectations: specific details, photos, and balanced pros/cons make reviews more persuasive and actionable.

- Source credibility and seller responses shape trust: verified purchasers, reviewer history, and timely responses to negative feedback mitigate concerns and improve purchase likelihood.

Psychological foundations of review influence

Social proof, conformity, and herd behavior

When you see hundreds or thousands of reviews, your brain shortcuts a complex judgment into a simple signal: popularity equals safety. Industry surveys typically report that between 70% and 90% of shoppers consult reviews before buying, and products with large review counts often show markedly higher conversion rates because you assume many buyers can't be wrong. Classic social-psychology findings (Asch-style conformity) map directly onto e-commerce: visible majority opinion nudges you toward the perceived norm, increasing the likelihood you'll follow the crowd rather than evaluate the product fully.

At the same time, herd dynamics create feedback loops: early positive reviews boost visibility, which generates more purchases and more positive reviews - a self-reinforcing cycle that can propel a product to market dominance. You should be aware that this same mechanism is vulnerable to manipulation; coordinated fake reviews or review brigading can manufacture a false sense of consensus and drive buying decisions that wouldn't occur under unbiased information conditions.

Trust formation and source credibility cues

You form trust quickly by scanning a few salient cues: reviewer badges (verified buyer), reviewer history, specificity of comments, recency, and platform endorsements. Short, generic five-star comments carry far less weight than a detailed three-paragraph account from a long-time reviewer with a "verified purchase" tag; in practice, that combination often shifts your trust more than a product’s aggregated star score. Platforms that surface reviewer reputation and verification dramatically change which reviews you accept as credible.

Context sharpens how you interpret those cues. When a review includes concrete details (measurements, hours of use, photos), you tend to treat it as evidence rather than opinion, and that tends to increase its persuasiveness, an effect firms often measure in conversion A/B tests. You also rely on demographic or situational similarity: if a reviewer describes using the product for the same purpose you have in mind, you weight their opinion more heavily. Verified badges and detailed firsthand accounts are among the strongest single signals for turning skepticism into purchase intent.

Cognitive heuristics and biases (availability, negativity, representativeness)

The availability heuristic makes vivid, recent, or emotionally charged reviews disproportionately influential: you remember a dramatic one-star story about a broken battery far more easily than ten bland five-star reports. Negativity bias compounds this effect - negative information typically carries about twice the impact of positive information in your risk assessments, so a single negative review can outweigh several positives when you evaluate safety or reliability.

Representativeness leads you to generalize from a few salient reviews to the whole product: if one reviewer reports a manufacturing defect, you may conclude the product is generally defective even when the actual defect rate is low. Because you are judging from a small, unrepresentative sample, products can be punished severely by shoppers after isolated incidents; in practice, a handful of extreme complaints can depress perceived quality and sales disproportionately.

To counter these distortions you tend to seek meta-information: review distributions, median scores, and highlighted pros/cons help you form a more representative judgment. Platforms that show the full distribution of star ratings or algorithmically surface typical (rather than extreme) reviews reduce the chance that one dramatic account will derail your decision-making, because you can see whether an extreme review is an outlier or part of a pattern. Displaying distribution and representative summaries is one of the most effective mitigations against availability and negativity biases.
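As a rough illustration of the meta-information described above, the sketch below computes a star distribution, mean, median, and the share of 1-2 star ratings from raw ratings. It is a minimal example assuming ratings arrive as a plain list of integers from 1 to 5; the function name `rating_summary` and the sample data are hypothetical.

```python
from collections import Counter
from statistics import mean, median


def rating_summary(ratings):
    """Summarize 1-5 star ratings so a single extreme review can be seen in context."""
    if not ratings:
        raise ValueError("need at least one rating")
    counts = Counter(ratings)  # missing star levels count as 0
    n = len(ratings)
    # Percentage of reviews at each star level, listed from 5 down to 1.
    distribution = {star: round(100 * counts[star] / n, 1) for star in range(5, 0, -1)}
    return {
        "n": n,
        "mean": round(mean(ratings), 2),
        "median": median(ratings),
        "distribution_pct": distribution,
        # Share of strongly negative reviews: small suggests outliers, large suggests a pattern.
        "low_star_pct": round(100 * (counts[1] + counts[2]) / n, 1),
    }


# Hypothetical product: 70 five-star, 20 four-star, 6 three-star, 2 two-star, 2 one-star.
ratings = [5] * 70 + [4] * 20 + [3] * 6 + [2] * 2 + [1] * 2
summary = rating_summary(ratings)
print(summary["mean"], summary["median"], summary["low_star_pct"])  # 4.54 5.0 4.0
```

On this data the median sits above the mean and only 4% of ratings are 1-2 stars, which suggests a dramatic one-star story is an outlier rather than part of a pattern.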

Characteristics of customer reviews and signals

Quantitative cues: star ratings, aggregates, and summary scores

You scan the average star rating first because it offers an immediate heuristic: a single visible number summarizes quality across many buyers. That number matters. Luca (2016) found that a one-star increase in Yelp rating was associated with a 5-9% increase in revenue for independent restaurants, and platforms such as Amazon and TripAdvisor use similar summary scores to drive search ranking and click-throughs.

At the same time, you weigh sample size: a 4.4 average with 5,000 reviews typically feels more reliable than a 4.8 with 12 reviews, so platforms incorporate review counts and Bayesian-type smoothing into displayed scores. Aggregates such as percent-recommended or net promoter-style summaries further shift decisions: when you see over 80% recommended across hundreds of reviews, conversion and trust rise even if the mean rating is only moderately high.
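The "Bayesian-type smoothing" mentioned above can be sketched as a weighted average that pulls products with few reviews toward a prior mean. This is a common textbook form, not any specific platform's formula; the prior mean of 4.0 and the prior weight of 20 reviews are hypothetical tuning values.

```python
def bayesian_average(mean_rating: float, n_reviews: int,
                     prior_mean: float = 4.0, prior_weight: float = 20.0) -> float:
    """Shrink an observed average toward prior_mean.

    prior_weight acts like a number of imaginary reviews at prior_mean, so a
    product needs many real reviews before its own average dominates.
    """
    if n_reviews < 0 or prior_weight <= 0:
        raise ValueError("n_reviews must be >= 0 and prior_weight > 0")
    return (prior_weight * prior_mean + n_reviews * mean_rating) / (prior_weight + n_reviews)


# The two products from the paragraph above.
few = bayesian_average(4.8, 12)      # 4.8 stars from 12 reviews
many = bayesian_average(4.4, 5000)   # 4.4 stars from 5,000 reviews
print(round(few, 2), round(many, 2))  # 4.3 4.4
```

With these settings the 12-review product is pulled down to about 4.3 while the 5,000-review product barely moves, so the larger sample ranks higher. A larger `prior_weight` makes the adjustment more conservative.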

Qualitative cues: narrative detail, specificity, and thematic content

You look for concrete details, such as battery life in hours, exact dimensions, model compatibility, or a mention of the shipping experience, because specificity reduces uncertainty. Longer reviews that name attributes and outcomes (e.g., “lasts 9 hours on a full charge,” “fits size M with 2 cm room”) are consistently rated as more helpful, both by other users and by the platform algorithms that surface “most helpful” feedback.

Themes within narratives shape how you interpret numeric scores: frequent mentions of the same topic (shipping, durability, odor, customer service) create an implicit weighting. For instance, if multiple reviewers cite “battery swelling” or “refused refunds,” you’re more likely to filter the product out even with a decent star average.

Two-sided narratives, where reviewers include both pros and cons or describe a fault plus how the seller fixed it, are particularly persuasive because they signal balanced observation rather than scripted promotion. You will often trust a 3-4 sentence review that names a fix or workaround more than a one-line 5-star cheer.

Verification and credibility signals: verified purchase, reviewer history, multimedia

You give more weight to badges and provenance: a “Verified Purchase” tag, a reviewer with hundreds of prior useful votes, or a profile labeled “Top Contributor” raises the posterior probability that the review reflects a real transaction. Platforms surface these markers because they measurably increase perceived trust and reduce perceived manipulation.

Multimedia inside reviews (photos and short videos showing the product in use) short-circuits doubt by supplying observable evidence. When you can see stitching, color under natural light, or how a device functions, you’re less likely to be swayed by extreme text-only reviews; many sellers report higher conversion and fewer returns when user images appear in review galleries.

Pay attention to timing and reviewer patterns as red flags: a sudden cluster of five-star reviews in 24-48 hours or numerous one-off reviewers with identical phrasing signals potential inauthenticity, while a reviewer with a long history of varied ratings and detailed comments signals reliability; both types of signals materially change how you should weight the review ensemble.
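The timing red flag described above can be checked mechanically. The sketch below finds the largest number of five-star reviews posted inside any 48-hour window using a sliding window over sorted timestamps. The `(timestamp, stars)` input format, the function name, and the alert threshold of 10 are assumptions for illustration; a real system would more likely compare bursts against the product's normal review rate.

```python
from datetime import datetime, timedelta


def max_five_star_burst(reviews, window=timedelta(hours=48)):
    """Return the most five-star reviews that fall within any single `window`.

    `reviews` is an iterable of (timestamp, stars) pairs.
    """
    times = sorted(ts for ts, stars in reviews if stars == 5)
    best, start = 0, 0
    for end in range(len(times)):
        # Advance the left edge until the window spans at most `window`.
        while times[end] - times[start] > window:
            start += 1
        best = max(best, end - start + 1)
    return best


BURST_THRESHOLD = 10  # hypothetical alert level

base = datetime(2024, 1, 1)
# Organic trickle: one review every two weeks, alternating 5 and 4 stars.
reviews = [(base + timedelta(days=14 * i), 5 if i % 2 == 0 else 4) for i in range(10)]
# Suspicious cluster: twelve five-star reviews within 12 hours.
reviews += [(base + timedelta(days=150, hours=h), 5) for h in range(12)]

burst = max_five_star_burst(reviews)
print(burst, burst >= BURST_THRESHOLD)  # 12 True
```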

Cognitive and affective mechanisms

Persuasion principles in reviews: authority, liking, reciprocity, scarcity

You respond strongly to cues of authority in reviews: badges like "Verified Purchase", expert bylines, or influencer endorsements serve as mental shortcuts that boost trust and shorten decision time. E-commerce studies and platform reports suggest that adding authoritative signals can lift conversion; platforms that surface expert reviews or certified-user tags sometimes report double-digit increases in product-page click-throughs. Social liking works alongside authority: reviews that describe relatable experiences or show photos of everyday users make you more likely to identify with the reviewer and adopt their recommendation.

Reciprocity and scarcity operate as behavioral accelerants in review contexts. If you see a brand responding personally to reviewers or offering small gestures (samples, coupons) in exchange for feedback, you feel a social pressure to reciprocate with a favorable perception or purchase. Scarcity signals embedded in reviews or review-aggregated displays-phrases like "rare find" or "only a few left" combined with high ratings-create urgency that can push you from browsing to buying within minutes. Be aware that these cues can be used ethically to guide decisions or manipulatively to create undue urgency.

Emotional processes: sentiment, affective forecasting, and emotional contagion

Sentiment in reviews isn't just positive or negative; the intensity and specificity of emotional language shape how you predict your own future feelings about a purchase. For instance, a hotel review saying "slept like a baby" or "staff saved our anniversary" generates vivid affective forecasts, leading you to imagine a similar emotional outcome. Sentiment analysis tools quantify valence and arousal, and platforms that surface aggregated sentiment scores (star averages plus comment tone) turn those signals into faster purchase decisions. Some research on review-driven categories such as electronics and travel suggests that sentiment-weighted summaries predict conversion better than raw star averages alone.

Emotional contagion means you pick up mood from reviews: high-arousal positive reviews (excited language, vivid sensory detail) tend to increase your enthusiasm, while angrier or disgust-laden reviews amplify avoidance. Social platforms and marketplaces amplify this effect by highlighting "most helpful" emotionally charged reviews, so a handful of vivid posts can sway thousands. Negative reviews often carry extra weight because negative emotions are more memorable and motivating, so a single vivid complaint can cut sales more than several bland positives can raise them.

More granularly, you respond differently to discrete emotions: joy and surprise drive exploratory purchases and sharing, whereas fear and disgust trigger extensive information search and reduced willingness to pay. Text features that capture emotional concreteness (sensory words, proper nouns, chronology) predict downstream behaviors such as add-to-cart and time-on-page, so platforms that encourage detailed storytelling systematically shape buyers' emotional expectations and choices.

Memory, priming, anchoring, and framing effects

Initial exposure to a review or a highlighted rating creates an anchor that colors subsequent evaluations: the first star rating or price you see sets a reference point for what counts as "good value," and later information is interpreted relative to that anchor. Priming operates similarly; if your feed surfaces reviews emphasizing durability first, you'll scan product specs with durability in mind and discount other attributes. Memory processes such as the peak-end rule change how you recall past reviews: extreme comments and the most recent posts disproportionately shape your retrospective judgment of a product.

Framing in reviews alters perceived trade-offs: a reviewer who frames a 20% slower processor as "longer battery life for traveling" converts a technical weakness into a benefit for a specific use case. You will often favor products whose reviews frame outcomes in gains rather than losses, and platforms that present aggregated pros/cons can shift your risk calculus. Because people exhibit loss aversion, reviews that highlight potential losses (e.g., "lost connection on three trips") typically have stronger behavioral effects than equivalent gain-focused statements.

Digging deeper, frequency and recency interact with framing to determine what you remember: repeated mention of the same flaw across multiple recent reviews forms a stronger memory trace and becomes a heuristic you use to reject options quickly. For practical application, curating recent, balanced highlights and surfacing exemplar narratives that reframe common negatives into context-specific trade-offs reduces the salience of singular negative memories and improves the accuracy of your final judgment.

How reviews shape each stage of the buying journey

Awareness and search: salience, ranking, and attention capture

You often encounter reviews before you see product details: star ratings and review counts appear in search snippets and marketplace listings, making some listings *stand out* on a crowded results page. Search algorithms and marketplace rankings use review signals (average rating and review volume) to boost visibility. The Spiegel Research Center found that products with five or more reviews are up to 270% more likely to be purchased than products with none, which gives platforms a strong incentive to place well-reviewed listings near the top of results.

When you scan results, visual cues matter: listings with star rich snippets and recent photo reviews capture attention, and some A/B tests have reported click-through gains of 20-35% from these elements. You’ll click more often on results that combine a strong average rating with a large, recent review set, because those cues reduce your initial uncertainty and make the listing more salient among similar options.

Evaluation and comparison: attribute weighting and trade-offs

During comparison, you let review content reweight attributes: if most reviewers mention battery life, portability, or customer service, those features become more important in your internal trade-off calculus. You rely on frequency and specificity: statements like “battery lasted 10 hours” or photos of real use push performance attributes higher in your priority list, sometimes outweighing brand reputation or the specifications on the product page.

Negative reviews shift attention asymmetrically: you’ll overweight rare but severe complaints (e.g., safety or reliability issues) more than you discount sporadic praise, because negativity bias makes potential losses more salient than equivalent gains. That’s why a product with a 4.2 average and two documented safety or failure incidents can feel riskier to you than a 3.9-rated competitor with only minor complaints.

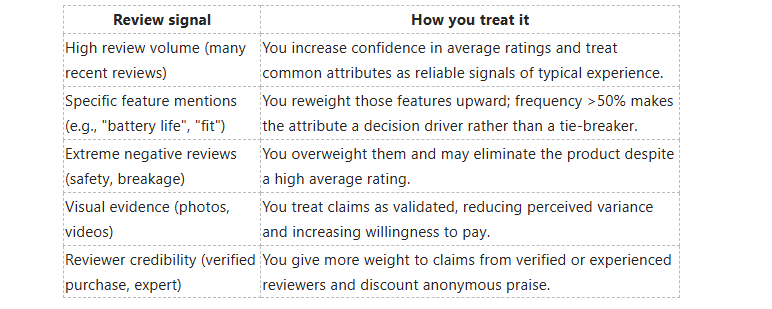

To see how specific review signals map to how you adjust attribute importance:

How review signals change your attribute weighting

Purchase conversion: trust, perceived risk reduction, and price sensitivity

You convert when reviews remove enough uncertainty to justify the spend. Trust-building elements (verified purchase badges, dated reviews, and photos of actual use) reduce perceived risk and shorten your decision process. Platforms that surface seller replies and clear return policies in reviews make you more likely to follow through; conversions often rise markedly when you see responsiveness and documented problem resolution in the review stream.

Price sensitivity shifts with the value signals in reviews: if reviewers repeatedly praise durability or total cost of ownership, you’re willing to pay a premium; conversely, recurring comments about poor value or hidden costs push you toward cheaper alternatives or make you wait for discounts. Some marketplace experiments suggest that strong social proof can offset price gaps of 10-20% in many categories, meaning you may accept higher prices when reviews deliver convincing evidence of superior outcomes.

In practice, you adjust trust and price sensitivity in three ways: you look for patterns (recurring praise or repeated complaints), you check recency (reviews from the past 6-12 months matter more in fast-moving categories), and you use seller responses as a proxy for post-purchase support. Each of these reduces perceived risk and raises your likelihood of completing the purchase.

Platform design, algorithmic curation, and moderation

Presentation, sorting, highlights, and summary design effects

Interface choices shape how you weigh reviews: showing an average rating as 4.5 instead of 4.3, surfacing a single "Top review" with a bold excerpt, or displaying only star tallies without text all change perceived quality. When platforms let you sort by "most helpful" versus "most recent," the same product can look dramatically different. Behavioral research and A/B tests often find that changing the default sort or the visibility of a highlighted review shifts click-through and conversion rates measurably, commonly by low double-digit percentages. You respond more strongly to short, bolded snippets and badges like "Verified purchase", which boost trust even when they mask nuance in long-form reviews.

Summaries and microcopy amplify frame effects. Aggregated sentiment bars, color-coded star strips, and algorithmic "review highlights" reduce cognitive load for you but also compress complex trade-offs into a few signals; for example, an at-a-glance summary that emphasizes five-star percentages will downplay recurring quality complaints that appear only in full-text reviews. Design choices that hide negative reviews behind accordions or collapse long negative threads can inflate your confidence in a product because you encounter fewer counterexamples during rapid browsing.

Algorithms, personalization, fake-review detection, and moderation policies

Personalization tailors review exposure to your profile: platforms use your past purchases, geography, and browsing history to prioritize reviews from reviewers similar to you, increasing relevance but also creating echo chambers where you see feedback that affirms prior preferences. Recommendation and ranking models typically combine signals like helpful votes, recency, and reviewer reputation; as a result, a review with many "helpful" clicks, or one written by a reviewer with a history of verified purchases, will reach you sooner and shape your judgment disproportionately. That amplification is often positive, because personalized surfacing raises the signal-to-noise ratio, but it can also lock you into a narrow perspective if diverse viewpoints are deprioritized.
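A ranking model that combines helpful votes, recency, and reviewer reputation might look something like the sketch below. The linear form, the log damping, the 180-day half-life, and every weight are hypothetical choices for illustration, not any platform's actual formula.

```python
import math
from dataclasses import dataclass


@dataclass
class Review:
    review_id: str
    helpful_votes: int
    age_days: float
    verified: bool
    reviewer_helpful_total: int  # helpful votes earned on the reviewer's past reviews


def rank_score(r: Review, half_life_days: float = 180.0) -> float:
    """Higher score means the review is shown earlier (hypothetical weights)."""
    helpfulness = math.log1p(r.helpful_votes)        # diminishing returns on votes
    recency = 0.5 ** (r.age_days / half_life_days)   # halves every half_life_days
    reputation = math.log1p(r.reviewer_helpful_total)
    verified = 1.0 if r.verified else 0.0
    return 1.0 * helpfulness + 2.0 * recency + 0.5 * reputation + 1.0 * verified


reviews = [
    Review("fresh-anonymous", helpful_votes=0, age_days=2, verified=False, reviewer_helpful_total=0),
    Review("older-trusted", helpful_votes=50, age_days=300, verified=True, reviewer_helpful_total=200),
    Review("recent-verified", helpful_votes=5, age_days=20, verified=True, reviewer_helpful_total=10),
]
ranked = [r.review_id for r in sorted(reviews, key=rank_score, reverse=True)]
print(ranked)  # ['older-trusted', 'recent-verified', 'fresh-anonymous']
```

Here the heavily upvoted review from a reputable reviewer outranks newer ones despite its age, which is the kind of amplification the paragraph above describes.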

Platforms deploy automated and manual moderation to fight fraud, using machine-learning classifiers, network analysis, and user-reporting workflows to detect suspicious behavior. Detection systems rely on patterns such as bursts of reviews, reused text, or clustered reviewer accounts; major platforms report removing or blocking large volumes of suspected fake reviews during enforcement sweeps and say these interventions limit widespread manipulation. However, moderation thresholds and policies vary: aggressive filtering reduces fake reviews but can mistakenly suppress legitimate negative feedback, while lax policies leave you exposed to paid or coordinated promotional reviews.

Detection techniques combine metadata and textual signals: behavioral features (timing, review frequency, IP/device overlaps), linguistic markers (unusual repetition, lack of concrete detail), and graph methods that reveal collusion among reviewers. Machine-learning systems trade off precision against recall, and platforms often tune models to avoid false removals even if that lets some fraudulent content persist. Because this trade-off shapes what you see, checking a review's provenance (verified purchase badges, reviewer history, and cross-platform corroboration) remains one of the best ways to evaluate its authenticity.
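One of the linguistic markers above, reused text, can be approximated by comparing word shingles (overlapping runs of k words) with Jaccard similarity. This is a simple, well-known near-duplicate technique rather than what any particular platform runs; the shingle size of 3, the 0.5 threshold, and the sample reviews are assumptions.

```python
import re
from itertools import combinations


def shingles(text: str, k: int = 3) -> set:
    """Set of overlapping k-word tuples from lowercased text."""
    words = re.findall(r"[a-z0-9']+", text.lower())
    return {tuple(words[i:i + k]) for i in range(len(words) - k + 1)}


def jaccard(a: set, b: set) -> float:
    """Size of intersection over size of union; 0.0 when both sets are empty."""
    if not a and not b:
        return 0.0
    return len(a & b) / len(a | b)


def near_duplicate_pairs(reviews: dict, threshold: float = 0.5, k: int = 3):
    """Return pairs of review IDs whose shingle sets overlap at least `threshold`."""
    sets = {rid: shingles(text, k) for rid, text in reviews.items()}
    return [(x, y) for x, y in combinations(sorted(sets), 2)
            if jaccard(sets[x], sets[y]) >= threshold]


reviews = {
    "r1": "Amazing product, best purchase ever, highly recommend to everyone!",
    "r2": "Amazing product best purchase ever highly recommend to everyone",
    "r3": "Battery lasted about 9 hours on a full charge during my trip.",
    "r4": "Amazing product, best purchase ever! Would buy again.",
}
print(near_duplicate_pairs(reviews))  # [('r1', 'r2')]
```

Punctuation differences do not hide the copy in `r2`, while `r4` shares an opening phrase with `r1` but only reaches a similarity of 0.3, below the threshold.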

Practical implications for businesses and marketers

Managing review ecosystems: solicitation, response strategies, and product feedback loops

When you solicit reviews, time the request to match the typical product experience: generally send the first request about 3-7 days after delivery for fast-moving consumer goods and 7-14 days after delivery for longer-use items. Use a single-click, mobile-first call to action and limit follow-ups to one or two reminders; depending on product type and channel, expect roughly 5-20% of post-purchase requests to convert into submitted reviews. Respond publicly to both positive and negative reviews, then move complex issues offline. Responding within 24-48 hours signals care and reduces escalation, and a consistent public-response program correlates with higher perceived trust. The stakes are commercial as well as reputational: Michael Luca found that a one-star increase in Yelp rating was associated with a 5-9% revenue uplift. Do not offer incentives or otherwise manipulate review submissions on platforms that forbid it; violating platform policies can lead to account penalties or delisting, so map your solicitation tactics to each platform’s terms and audit compliance quarterly.
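The solicitation timing above can be encoded as a small scheduling helper. This sketch picks a point near the middle of each suggested window (day 5 for fast-moving goods, day 10 for longer-use items) and spaces reminders four days apart; those specific values, the category labels, and the function name are hypothetical.

```python
from datetime import date, timedelta

# Hypothetical first-request delays chosen inside the suggested windows.
FIRST_REQUEST_DELAY = {
    "fast_moving": timedelta(days=5),   # window: 3-7 days after delivery
    "long_use": timedelta(days=10),     # window: 7-14 days after delivery
}


def review_request_schedule(delivered: date, category: str,
                            reminders: int = 1,
                            reminder_gap: timedelta = timedelta(days=4)) -> list[date]:
    """Return send dates: one initial request plus at most two reminders."""
    if not 0 <= reminders <= 2:
        raise ValueError("limit follow-ups to at most two reminders")
    first = delivered + FIRST_REQUEST_DELAY[category]
    return [first + i * reminder_gap for i in range(reminders + 1)]


print(review_request_schedule(date(2024, 3, 1), "fast_moving", reminders=2))
# [datetime.date(2024, 3, 6), datetime.date(2024, 3, 10), datetime.date(2024, 3, 14)]
```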

Build a closed-loop feedback pipeline by automatically tagging review themes (quality, shipping, usability) and surfacing the top 3-5 issues to product and operations teams weekly; prioritize fixes for issues that appear in more than a few percent of reviews or that involve safety or regulatory concerns. Instrument dashboards that show review velocity, average rating by cohort, and post-resolution outcomes, and run A/B tests that compare product pages with different review excerpts and badge placements. Research suggests that conversion benefits rise sharply as review counts accumulate, with meaningful gains once a product reaches roughly 5-50 reviews. Tie remediation KPIs to commercial metrics (rating lift, return-rate reduction, and repeat-purchase lift), and aim to convert a measurable share of resolved complaints into updated reviews to close the loop between customer voice and product improvement.
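The automatic theme tagging and "top issues" step above could start as simply as keyword matching. This sketch counts how many reviews touch each theme, keeps themes above a share threshold, and always keeps safety mentions regardless of frequency. The keyword lists, the 3% threshold, and the function names are hypothetical; production systems would more likely use a trained classifier, and plain substring matching will misfire on words like "chocolate" that contain "late".

```python
from collections import Counter

# Hypothetical keyword lexicon; substring matching is crude but easy to audit.
THEMES = {
    "quality": ("broke", "defect", "flimsy"),
    "shipping": ("late", "shipping", "delivery", "arrived"),
    "usability": ("confusing", "instructions", "setup"),
    "safety": ("overheat", "smoke", "swell", "burn"),
}
ALWAYS_FLAG = {"safety"}  # escalate safety themes even when they are rare


def tag_themes(text: str) -> set[str]:
    """Return every theme whose keywords appear in the review text."""
    lowered = text.lower()
    return {theme for theme, words in THEMES.items() if any(w in lowered for w in words)}


def top_issues(reviews: list[str], min_share: float = 0.03, top_n: int = 5):
    """Return (theme, share of reviews) pairs worth sending to product/ops teams."""
    if not reviews:
        return []
    counts = Counter(theme for text in reviews for theme in tag_themes(text))
    n = len(reviews)
    flagged = [(theme, c / n) for theme, c in counts.most_common()
               if c / n >= min_share or theme in ALWAYS_FLAG]
    return flagged[:top_n]


reviews = (["Arrived late, box was crushed"] * 8
           + ["Setup instructions were confusing"] * 2
           + ["Battery started to swell after a month"]
           + ["Works great, very happy"] * 89)
print(top_issues(reviews))  # [('shipping', 0.08), ('safety', 0.01)]
```

Usability complaints (2%) fall below the threshold, while the single swelling report is escalated because it touches safety.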

To wrap up

Customer reviews function as powerful social proof and mental shortcuts that shape how you evaluate products online: aggregated star ratings simplify decisions, consensus increases perceived reliability, and vivid anecdotes reduce your sense of uncertainty. Psychological biases such as negativity bias, conformity, and the availability heuristic influence how you weigh individual comments, while reviewer credibility, review recency, and the presence of detailed evidence determine how much trust you place in what you read.

You should treat reviews as structured signals rather than definitive answers: prioritize specific, verifiable details, scan reviewer histories for authenticity, balance ratings distribution against substantive critiques, and be alert for repetitive phrasing or timing patterns that suggest manipulation. Use reviews to reduce perceived risk and narrow options, then confirm critical claims through product specifications or independent sources before completing a purchase.

FAQ

Q: How do customer reviews act as social proof and influence purchase decisions?

A: Reviews serve as social proof by signaling what others have done and liked; people use them to reduce uncertainty and follow perceived norms. When shoppers face many options or limited product knowledge they rely on collective feedback to infer quality, safety, and popularity. Visible metrics like total review count and average rating create a sense of consensus that increases perceived reliability and can speed decision-making through conformity and heuristic shortcuts.

Q: How do star ratings and review valence shape willingness to buy?

A: Star ratings provide a quick heuristic: higher averages increase purchase intent while lower averages raise perceived risk. Valence asymmetry means negative reviews typically weigh more than positive ones because losses loom larger than gains; a single detailed negative review can disproportionately lower intent. Consumers also consider the distribution (many 4-star vs few 5-star) and set thresholds (e.g., preferring 4+ stars), so both average and variance influence conversions.

Q: What characteristics of individual reviews make them more persuasive and trustworthy?

A: Persuasive reviews are specific, detailed, and include concrete examples of use, outcomes, or comparisons. Verified-purchase markers, reviewer profiles, photos or videos, balanced pros-and-cons, and recent timestamps increase credibility. Language that is precise rather than hyperbolic, mentions of context (how and when the product was used), and consistency across multiple reviews all boost diagnostic value and trustworthiness.

Q: How do negative reviews affect buying behavior and what response strategies work best?

A: Negative reviews elevate perceived risk and draw attention to potential failure modes, which can deter purchases, especially when complaints concern core product functions or safety. However, a small number of honest negatives can increase overall credibility. Effective business responses are prompt, empathetic, solution-focused, and public: acknowledge the issue, offer remediation, and show steps taken to fix systemic problems. Transparent replies and evidence of resolution often restore trust and limit long-term damage.

Q: How do review recency, volume, and source credibility interact to influence online buyers?

A: Recency signals current product quality and relevance; recent positive reviews counter older complaints and boost confidence. Volume reduces uncertainty by providing more data points, and large sample sizes make averages more reliable. Source credibility matters: verified buyers, experts, or known influencers carry greater weight than anonymous accounts. Shoppers integrate these cues: recent, numerous, and credible reviews produce the strongest persuasive effect.