From click to checkout: how consumer reviews drive purchasing behavior

Over the past decade, consumer reviews have reshaped how you shop online, turning browsing into buying by amplifying social proof and highlighting both product strengths and risks. When reviews emphasize quality and value, your confidence and likelihood of converting rise; when they surface safety concerns or hidden defects, you often abandon the purchase. Savvy brands use reviews to build trust and drive higher sales, making feedback a decisive force in your buying journey.

Key Takeaways:

- Customer reviews boost conversion by building trust and reducing purchase anxiety.

- Higher review volume and recent reviews increase purchase likelihood and visibility in search.

- Star ratings and sentiment drive click‑throughs; balanced negative feedback can enhance credibility.

- Authentic user‑generated content provides social proof and shortens the path from discovery to checkout.

- Reviews improve SEO and power rich snippets, driving more organic traffic to product pages.

The Review Ecosystem: Platforms, Formats, and Actors

Types of platforms: marketplaces, brand sites, third‑party aggregators

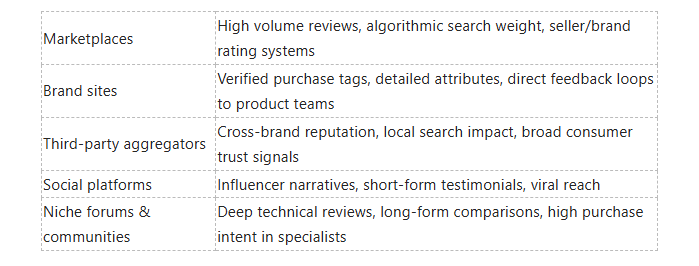

You encounter three dominant platform types that shape how reviews influence purchase decisions: large marketplaces (Amazon, eBay) that prioritize volume and search relevance; direct brand sites, where reviews tie into first‑party data and CRM; and third‑party aggregators (Trustpilot, Google Reviews, Yelp) that support cross‑brand discovery and reputation signals. Marketplaces often surface reviews in search snippets and let shoppers filter results by rating; brand sites let you collect structured attributes (fit, usage, problems); and aggregators provide visibility across channels and for local-intent searches.

- Marketplaces: high traffic, algorithmic ranking, emphasis on review count and recency.

- Brand sites: controlled presentation, richer follow-up (surveys, support), stronger data ownership.

- Aggregators: cross‑site credibility, SEO value, influence on discovery outside product pages.

Whichever platform you choose shapes how your product is discovered, which trust signals matter most, and which conversion mechanics you must optimize.

Review formats and metadata: star ratings, text, images, video, Q&A

Star ratings provide an at‑a‑glance heuristic: many shoppers filter to 4+ stars, so clearing that threshold matters, and the full rating distribution tells shoppers more than the mean alone. Text reviews supply qualitative context (useful phrases, pain points, and feature requests) that your product team can act on. Photos and video reviews close the expectation gap: consumer tests and industry reports often show that listings with user images or short videos have lower return rates and higher conversion, particularly in categories like apparel and electronics. Q&A threads act as evergreen micro‑content that answers common objections and reduces pre‑purchase friction.

Metadata such as verified-purchase badges, timestamps, reviewer location, and product attributes (size, color, use case) enable segmentation and automated signals (for example, highlighting a recent negative trend). You can feed this structured metadata into machine-learning models that track sentiment trends, detect early defects, and prioritize responses when review velocity spikes.
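As a minimal sketch of one such automated signal, the Python below flags a SKU whose recent share of 1-2 star reviews has risen well above its older baseline. Several choices here are illustrative assumptions rather than any platform's actual rule:

- the hypothetical `Review` record;
- the 30-day window;
- the 10-point margin;
- the five-review minimum;
- counting only verified reviews.

```python
from dataclasses import dataclass
from datetime import date, timedelta


@dataclass
class Review:
    """Hypothetical review record carrying the metadata discussed above."""
    rating: int      # 1-5 stars
    posted: date     # review timestamp at day precision
    verified: bool   # verified-purchase flag


def negative_share(reviews):
    """Fraction of reviews rated 1 or 2 stars (0.0 when the list is empty)."""
    if not reviews:
        return 0.0
    return sum(r.rating <= 2 for r in reviews) / len(reviews)


def flag_negative_trend(reviews, today, window_days=30, margin=0.10, min_recent=5):
    """Flag a SKU when the share of 1-2 star reviews in the last `window_days`
    exceeds the share among older reviews by more than `margin` (absolute)."""
    cutoff = today - timedelta(days=window_days)
    verified = [r for r in reviews if r.verified]  # assumption: ignore unverified
    recent = [r for r in verified if r.posted > cutoff]
    older = [r for r in verified if r.posted <= cutoff]
    if len(recent) < min_recent:
        return False  # too few recent reviews to call it a trend
    return negative_share(recent) - negative_share(older) > margin
```

Requiring a minimum number of recent reviews trades detection speed for fewer false alarms on low-volume SKUs.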

Stakeholders and roles: consumers, reviewers, brands, platforms, moderators

Consumers use reviews for both validation and social proof: they scan ratings, look for specific phrases, and weigh recent experiences more heavily. Reviewers range from casual one‑time posters to power reviewers who influence niche audiences; identifying and engaging top contributors can amplify authentic content. Brands are responsible for monitoring sentiment, responding to complaints, and feeding review insights into product roadmaps; companies that act on review feedback typically shorten issue-resolution cycles and reduce churn.

Platforms serve dual roles: they host content and enforce trust mechanisms (verification, fraud detection). Meanwhile moderators, both human and algorithmic, must balance removing abusive or fake content against preserving authentic negative feedback. You should track metrics such as review velocity, authenticity signals, and response times, because these operational levers directly affect perceived trust and conversion outcomes.

Psychological and Behavioral Mechanisms

Social proof, herd behavior, and normative influence

You see collective endorsement as a shortcut: when dozens or hundreds of people rate a product highly, you infer it must be good. Research from Northwestern University's Spiegel Research Center found that a product with five reviews was about 270% more likely to be purchased than one with no reviews, which helps explain why marketplaces prioritize review visibility. In practice, you'll notice conversion lifts most sharply once a product crosses small thresholds (for example, moving from zero to five reviews), because that early volume signals that buying the item is a socially accepted choice.

Social proof operates at both conscious and automatic levels. Star averages and review counts give you quick normative information, while highlighted comments and photos let you adopt specific social scripts ("others like me used this for X"). That creates a bandwagon effect in which visible popularity begets more popularity, and it can also produce herd behavior in time-limited contexts (flash sales, trending lists). Positive review volume tends to increase conversion and perceived safety. Sudden surges or uniformly glowing praise, however, can trigger shopper skepticism and scrutiny from platforms and regulators policing fake reviews.

Trust, credibility cues, and source evaluation

You rely on heuristics to judge reviewer credibility because you can't verify every claim. Verified-purchase badges, reviewer history, recency, photos or videos, and a balance of pros and cons are the fastest signals you use to decide whether a review is credible. Platforms that surface these cues (Amazon's Verified Purchase tag, Google's reviewer profile links, Trustpilot's trust badges) drive higher engagement because these signals reduce your perceived risk and shorten decision time.

When assessing reviews, you tend to weight negative information more heavily: research across consumer behavior studies indicates that negative reviews have outsized influence, often being perceived as 2-3 times more diagnostic than positive ones. To evaluate authenticity, you check for specifics (dates, use cases, photos), repetitive phrasing across accounts, and whether the reviewer has a history of balanced feedback; your ability to spot these cues directly affects whether review content moves you from interest to purchase.

Emotional resonance, narrative persuasion, and memory effects

Your decisions are strongly shaped by emotion and story framing in reviews. Narrative reviews that describe a problem-solution arc (how the product solved a pain point or improved daily life) persuade more effectively than dry lists of features. Travel platforms such as Airbnb and TripAdvisor are frequently cited examples where story-rich reviews support bookings and longer-term loyalty. Emotional detail makes reviews more memorable and more likely to be shared, so a handful of vivid anecdotes can have an outsized effect on behavior.

Emotion also interacts with memory consolidation: heightened arousal while you read a review makes that review easier to retrieve later when you compare options. That is why you respond more to vivid testimonials and sensory descriptions than to aggregate statistics alone. Encouraging reviewers to include concise storytelling elements (specific outcomes, timelines, and sensory cues) amplifies the persuasive power of reviews and increases the likelihood you'll recall them at checkout.

Review Signals Across the Purchase Funnel

Discovery and ranking: SEO, internal search, and algorithmic boosts

You want reviews to feed both external and internal discovery. Implement schema.org product and review markup so search engines can show star ratings and review counts in rich results, which can noticeably lift organic CTR. On your site, index review text and metadata (pros/cons, use cases, location) so internal search and faceted navigation can match queries like "durable hiking boots" or "sensitive-skin moisturizer."
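A minimal sketch of that markup, assuming you emit it as JSON-LD from Python. The following are standard schema.org vocabulary:

- the types `Product` and `AggregateRating`;
- the properties `ratingValue`, `reviewCount`, `bestRating`, and `worstRating`.

The function names and the sample product are hypothetical. Whether stars actually appear in results is still up to each search engine's eligibility rules.

```python
import json


def product_jsonld(name, ratings):
    """Build a schema.org Product object carrying an AggregateRating."""
    average = sum(ratings) / len(ratings)
    return {
        "@context": "https://schema.org",
        "@type": "Product",
        "name": name,
        "aggregateRating": {
            "@type": "AggregateRating",
            "ratingValue": round(average, 1),
            "reviewCount": len(ratings),
            "bestRating": 5,
            "worstRating": 1,
        },
    }


def jsonld_script_tag(data):
    """Wrap the object in the script tag that crawlers read JSON-LD from."""
    return f'<script type="application/ld+json">{json.dumps(data)}</script>'


# Example with a hypothetical product:
print(jsonld_script_tag(product_jsonld("Trail Boot", [5, 4, 4, 5, 3])))
```

Generating the markup from the same ratings that feed the on-page summary keeps the two consistent, which search engines generally expect.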

Algorithms reward signals beyond the average rating: velocity (how quickly reviews accumulate), keyword diversity within reviews, and the presence of photos or videos. In practice, surfacing review-derived badges (e.g., "Top Reviewed," "Trending") on listing pages can increase clicks. One A/B test for a mid-size retailer showed a 27% lift in click-through after adding review-rich snippets and trending badges to category pages.

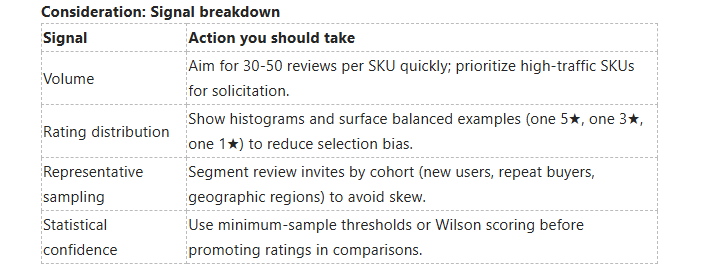

Consideration and comparison: volume, distribution, and representative sampling

When customers compare options, they read both the average rating and the distribution. You should show a histogram so buyers can see whether a 4.3 average is built on mostly 5s and 1s or on a tight cluster of 4s. For many categories, moving from single-digit review counts to 30-50 reviews materially increases perceived reliability. Beyond roughly 100 reviews, conversion gains start to plateau for fast-moving SKUs.

Distribution matters more than a single mean. Highlight how many 5-star versus 1-star reviews there are, show representative reviews for common use cases, and make sure sampling covers different buyer segments (age, use intensity, geography). Use verified-purchase flags. Request targeted feedback at more than one point (for example, around 7 days and again around 30 days after delivery) to separate early-use issues from long-term durability.

You should apply simple statistical guards so you don't surface noisy signals. For small N, display a confidence band or hide the aggregate rating until a minimum review count is met. To rank products, consider the Wilson score lower bound or Bayesian shrinkage; both methods reduce the chance that a handful of extreme reviews distorts buyer perception.
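Both guards can be sketched in a few lines of Python, under a few assumptions:

- a review counts as "positive" at 4-5 stars;
- `z=1.96` gives a roughly 95% Wilson interval;
- the prior weight of 10 is an illustrative choice, not a recommended constant.

The function names are hypothetical.

```python
from math import sqrt


def wilson_lower_bound(positive, total, z=1.96):
    """Lower bound of the Wilson score interval for the share of positive
    (assumed: 4-5 star) reviews. Small samples get pulled down hard."""
    if total == 0:
        return 0.0
    p = positive / total
    denom = 1 + z * z / total
    centre = p + z * z / (2 * total)
    spread = z * sqrt(p * (1 - p) / total + z * z / (4 * total * total))
    return (centre - spread) / denom


def shrunk_mean(ratings, prior_mean, prior_weight=10):
    """Bayesian-average star rating: behaves as if `prior_weight` extra
    reviews at `prior_mean` (e.g. the catalog average) were mixed in."""
    return (prior_weight * prior_mean + sum(ratings)) / (prior_weight + len(ratings))
```

With these defaults, 90 positive reviews out of 100 score about 0.83, while 2 out of 2 score only about 0.34. The well-reviewed product therefore ranks first even though its raw positive share is lower. Likewise, two 5-star reviews against a 4.0 catalog mean shrink to roughly 4.17 rather than a perfect 5.0.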

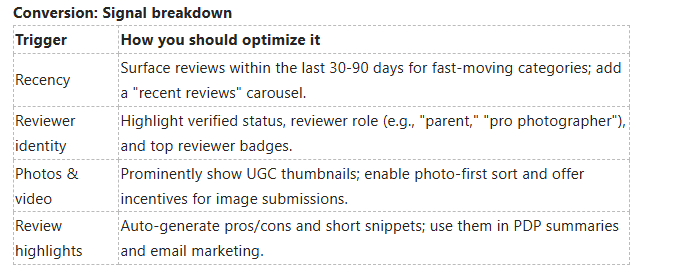

Conversion triggers: recency, reviewer identity, photos, and review highlights

Recency is a top conversion lever: buyers often ignore ratings older than 12 months in fast-evolving categories (electronics, skincare). Make recent positive reviews and "verified purchaser" badges prominent, and show reviewer context (age, use case, verified-buyer status) so the shopper can judge fit. Photos and short video clips build trust quickly. In apparel and beauty, user-generated content (UGC) images can lift conversion by double-digit percentages when displayed next to product photos.

Review highlights and structured pros/cons act as micro-conversion copy: extract common phrases ("no break-in required," "left-side pocket missing") and surface them as bullets on the product page. Allow filtering by reviews with photos or by reviewer attributes so a shopper can see "5 reviews from hikers" or "3 photos from people over 6'." That matching reduces friction at the moment of purchase.

Mitigate risk by moderating for authenticity and flagging suspicious patterns; at the same time surface the most context-rich reviews first so your shoppers see real-world evidence (photos, use-case notes, verified badges) that directly answers their purchase questions.

Measuring Impact: Analytics and Experimental Design

Core KPIs: conversion rate, average order value, retention, return rates

You should track how review exposure changes baseline conversion at multiple points in the funnel: product detail page (PDP) conversion, add-to-cart conversion, and checkout completion. Results reported by multiple retailers show that displaying robust review content can drive meaningful lifts. In many categories you can expect conversion lifts of 10-30% when reviews are prominent and trusted, while weak or missing review signals often depress conversion by a similar magnitude. Segment by star band, review count, and review recency, because a product with a 4.8 average from 200 reviews behaves very differently from one with a 4.2 average from 5 reviews.

Your AOV, retention, and return-rate metrics will usually move together but not identically. Highlighting product comparisons and high-quality reviews often increases AOV by 5-15% through upsell and cross-sell. The clearer expectations set by detailed reviews can also reduce return rates by 5-15%. Instrument event-level metrics (review impressions, review clicks and expands, helpful votes, verified-purchase flags) and tie them to user cohorts. That shows how review engagement translates into repeat-purchase probability and retention over 30, 90, and 180 days.

Attribution and multi‑touch modeling for review influence

Don’t rely solely on last-click; you need multi-touch approaches because reviews frequently act as mid-funnel validation or late-stage reassurance. Implement time-decay and position-aware weighting, then compare rule-based splits (first-touch, last-touch, linear) with algorithmic methods such as Shapley value attribution or Markov-chain removal analysis. In practice, brands that apply Shapley or Markov approaches often find that 20-40% of the attributable conversion effect aligns with review exposures rather than advertising or search alone.
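The following is a minimal sketch of Shapley value attribution over conversion paths. It assumes one common coalition definition: the value of a channel set is the number of converting paths whose touchpoints all fall inside that set.

- With few channels, the sketch averages marginal contributions over every ordering.
- With `samples` set, it averages over random orderings instead (Monte Carlo), which scales to larger channel sets.

Channel names, paths, and function names are hypothetical.

```python
import random
from itertools import permutations


def coalition_value(paths, coalition):
    """Assumed value function: conversions from paths whose touchpoints
    are all contained in `coalition`."""
    return sum(1 for touches, converted in paths
               if converted and set(touches) <= coalition)


def shapley_attribution(paths, samples=None, seed=0):
    """Shapley credit per channel, exact when `samples` is None,
    otherwise a Monte Carlo estimate over `samples` random orderings."""
    channels = sorted({c for touches, _ in paths for c in touches})
    if samples is None:
        orders = list(permutations(channels))
    else:
        rng = random.Random(seed)
        orders = [rng.sample(channels, len(channels)) for _ in range(samples)]
    credit = dict.fromkeys(channels, 0.0)
    for order in orders:
        seen = set()
        for channel in order:
            before = coalition_value(paths, seen)
            seen.add(channel)
            # Marginal contribution of `channel` given the channels before it.
            credit[channel] += coalition_value(paths, seen) - before
    return {c: total / len(orders) for c, total in credit.items()}


paths = [(["search", "review"], True), (["search"], False),
         (["review"], True), (["ad"], False), (["search"], True)]
print(shapley_attribution(paths))  # search and review share credit; ad gets none
```

Credits always sum to the total number of conversions, which makes the output easy to reconcile with reported sales.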

Operationally, treat review events as first-class touches in your attribution data model. Log impressions, expansions, and interactions with unique user-session IDs, then stitch sessions across devices within a 7-30 day exposure window. Use hashed identifiers and probabilistic matching for anonymous users. Validate models by running holdout experiments in which you suppress review impressions for a test cohort and observe the counterfactual conversion behavior; that gives you a sanity check against inflated algorithmic attributions.

For implementation detail, compute Shapley contributions via Monte Carlo sampling across touchpoint permutations. Alternatively, run a Markov-chain absorbing-state analysis in which you remove the “review” node and measure the drop in conversion probability. If conversion falls from 3.0% to 2.4% when you remove review impressions, the removal effect of review exposure for that path is (3.0 - 2.4) / 3.0 = 20%. Also tune your exposure window (7, 14, or 30 days) by product type; long‑consideration categories need longer windows to capture review influence.
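A minimal sketch of the Markov-chain removal analysis described above follows. It rests on these assumptions:

- It builds a first-order chain from observed paths.
- It estimates the probability of reaching the conversion state from `start` by simple fixed-point iteration.
- Removing a channel sends every transition into that channel to the non-converting `null` state.

The state names `start`, `conv`, and `null`, the sample paths, and the function names are hypothetical.

```python
from collections import defaultdict


def transition_probs(paths):
    """Estimate first-order transition probabilities from (touchpoints, converted) paths."""
    counts = defaultdict(lambda: defaultdict(int))
    for touches, converted in paths:
        states = ["start", *touches, "conv" if converted else "null"]
        for a, b in zip(states, states[1:]):
            counts[a][b] += 1
    return {a: {b: n / sum(nxt.values()) for b, n in nxt.items()}
            for a, nxt in counts.items()}


def conversion_prob(trans, removed=None, iters=1000):
    """Probability of absorbing in 'conv' from 'start'. Transitions into
    `removed` count as non-converting. A fixed iteration count is used for
    simplicity; it converges quickly on small graphs."""
    p = defaultdict(float)
    p["conv"] = 1.0
    for _ in range(iters):
        for state, nxt in trans.items():
            if state == removed:
                continue
            p[state] = sum(prob * (0.0 if tgt == removed else p[tgt])
                           for tgt, prob in nxt.items())
    return p["start"]


def removal_effect(paths, channel):
    """Relative drop in conversion probability when `channel` is removed."""
    trans = transition_probs(paths)
    base = conversion_prob(trans)
    return (base - conversion_prob(trans, removed=channel)) / base
```

The returned value is the same ratio as the worked example above: a drop from 3.0% to 2.4% gives (0.030 - 0.024) / 0.030 = 0.2. Attribution tools commonly normalize the removal effects of all channels so they sum to one before splitting conversions.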

A/B testing, uplift measurement, and causal inference for review features

You should design randomized experiments for any feature that changes review visibility or presentation. Examples include:

- showing vs. hiding reviews;
- different summary snippets;
- verified badges;
- default review sorting;
- highlighted expert reviews.

Measure immediate conversion, AOV, and return rates during the test, and extend measurement to 30-90 days to capture retention and return behavior. As a rule of thumb, if your baseline conversion is ~2-3% and you want to detect a 10% relative lift, plan for tens to hundreds of thousands of visitors per arm depending on variance. Lower baseline conversion rates and smaller target lifts both require larger samples.
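To check that rule of thumb, here is a sketch of the standard normal-approximation sample-size formula for comparing two proportions. It assumes a two-sided test with alpha = 0.05 and 80% power by default, and uses Python's built-in `statistics.NormalDist` for the z-values. The function name is hypothetical.

```python
from math import ceil
from statistics import NormalDist


def visitors_per_arm(baseline, relative_lift, alpha=0.05, power=0.8):
    """Visitors needed in each arm to detect `relative_lift` over a
    `baseline` conversion rate with a two-sided two-proportion z-test."""
    p1 = baseline
    p2 = baseline * (1 + relative_lift)
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # ~1.96 for alpha=0.05
    z_beta = NormalDist().inv_cdf(power)           # ~0.84 for 80% power
    variance = p1 * (1 - p1) + p2 * (1 - p2)
    return ceil((z_alpha + z_beta) ** 2 * variance / (p2 - p1) ** 2)
```

The required sample size varies with the baseline:

| Baseline conversion | Target (10% relative lift) | Visitors per arm |
|---|---|---|
| 2.5% | 2.75% | roughly 64,000 |
| 2% | 2.2% | about 81,000 |
| 3% | 3.3% | about 53,000 |

Higher-variance metrics such as AOV need their own calculation.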

When randomization isn’t possible, apply uplift modeling and causal methods: causal forests, propensity-score stratification, difference-in-differences for phased rollouts, or instrumental variables when exposure is endogenous. For large retailers, a recommended pattern is to use randomized feature flags for a subset of users (10-20% holdout for long-term LTV measurement) and complement that with causal-modeling on the observational remainder to estimate heterogeneous treatment effects by product, user tenure, and traffic source. That hybrid approach helps you quantify short-term conversion uplift and long-term lifetime value impact together.
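For phased rollouts, the difference-in-differences estimate mentioned above reduces to simple arithmetic. The treated group's change in conversion rate, minus the control group's change over the same period, estimates the feature's effect. This holds only under the parallel-trends assumption, i.e. both groups would have moved alike without the feature. The sketch below takes (conversions, visitors) pairs; the function names and numbers are hypothetical.

```python
def rate(conversions, visitors):
    """Conversion rate from raw counts."""
    return conversions / visitors


def diff_in_diff(treated_pre, treated_post, control_pre, control_post):
    """Each argument is a (conversions, visitors) tuple. Returns the
    treated group's change in conversion rate minus the control group's."""
    treated_change = rate(*treated_post) - rate(*treated_pre)
    control_change = rate(*control_post) - rate(*control_pre)
    return treated_change - control_change


# Hypothetical rollout: treated 2.0% -> 2.6%, control 2.1% -> 2.3%.
effect = diff_in_diff((200, 10_000), (260, 10_000), (210, 10_000), (230, 10_000))
print(f"{effect:.1%}")  # a 0.4-point absolute lift attributed to the feature
```

In practice you would also compute a standard error (for example via a regression with group and period indicators) before trusting the point estimate.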

Watch for interference and contamination: if users see multiple variants across devices, your estimated uplift will be biased toward zero. Several guardrails help:

- Use cluster randomization by account or household where applicable.
- Use sequential testing with pre-specified stopping rules (for example, a Bayesian design) so you can stop early without inflating false positives.
- Always validate short-term lifts against post-experiment LTV and return-rate windows to avoid chasing novelty effects.

Pre-specified primary metrics and holdout cohorts protect you from misattributing ephemeral gains.
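As a sketch of the Bayesian quantity such a design monitors, the Python below estimates the posterior probability that variant B converts better than variant A. It assumes independent Beta(1, 1) priors and binomial conversion data, and draws from the two Beta posteriors with the standard library's `random.betavariate`. The function name and the stopping threshold you compare it against are hypothetical. Checking this probability repeatedly against a loose threshold can still raise the false-positive rate, so the threshold and checkpoints should be fixed in advance and ideally calibrated by simulation.

```python
import random


def prob_b_beats_a(conv_a, n_a, conv_b, n_b, draws=20000, seed=0):
    """Monte Carlo estimate of P(rate_B > rate_A) under Beta(1, 1) priors
    updated with binomial conversion counts."""
    rng = random.Random(seed)
    wins = 0
    for _ in range(draws):
        a = rng.betavariate(1 + conv_a, 1 + n_a - conv_a)  # posterior draw, A
        b = rng.betavariate(1 + conv_b, 1 + n_b - conv_b)  # posterior draw, B
        wins += b > a
    return wins / draws


# Hypothetical interim look: stop only if this exceeds a pre-registered bar.
print(prob_b_beats_a(200, 10_000, 300, 10_000))
```
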

Operationalizing Reviews: Strategy and Best Practices

Soliciting authentic reviews: timing, incentives, and post‑purchase flows

You should time review requests to match real product use. For consumables and fast-feedback items, send the first request 3 to 7 days after delivery. For durables or items requiring setup, wait 14 to 30 days so reviewers can give informed feedback. Typical post‑purchase email review response rates sit around 2-10%. Progressive prompts (star rating first, then open text), one‑click review links, and a follow-up reminder can push that into the 15-20% range on optimized flows. Include SMS or in‑app nudges when appropriate, and always show the specific product variant and order details to reduce friction.
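A minimal scheduling sketch, assuming you key delays by a simple product category. The specific delays (5 days for consumables, 21 for durables, with a 7-day gap before one reminder) are hypothetical values picked from inside the ranges above, as are the dictionary and function names.

```python
from datetime import date, timedelta

# Hypothetical delays in days after delivery, chosen within the ranges above
# (3-7 days for consumables, 14-30 days for durables).
FIRST_REQUEST_DELAY = {"consumable": 5, "durable": 21}
REMINDER_GAP_DAYS = 7  # assumed spacing before a single follow-up reminder


def review_request_dates(delivered: date, category: str) -> tuple[date, date]:
    """Return (first_request, reminder) send dates for one delivered order."""
    first = delivered + timedelta(days=FIRST_REQUEST_DELAY[category])
    return first, first + timedelta(days=REMINDER_GAP_DAYS)
```

In a real flow, the reminder would be cancelled as soon as a review for that order arrives, so customers are never asked twice for the same item.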

Incentives increase response volumes but must be structured to preserve authenticity: offer a neutral reward (e.g., 5-10% off next purchase, 50-200 loyalty points, or entry into a monthly sweepstakes) and disclose the incentive clearly to comply with FTC and platform policies. Avoid any program that conditions the reward on positive language; instead run targeted sampling (similar to Amazon Vine) or invitation-only review programs for early feedback and richer media. Finally, tag submissions as “verified purchase” where possible and surface that badge prominently to reduce suspicion and fraud.

Presentation and UX: summary signals, filtering, sorting, and trust badges

Place summary signals where they influence the conversion funnel. Show the average rating, total review count, and a recency indicator near the primary CTA, for example “4.6 • 3,212 reviews • latest 3 days ago.” Spiegel Research Center findings show that products with reviews are significantly more likely to be purchased than products without them. Use a visual rating distribution (bars for 5→1 stars) and surface a quick sentiment snapshot (percentage positive/negative). Implement schema.org review markup so your stars and counts can appear in search results; in some tests, structured review snippets have lifted organic CTR by double‑digit percentages.
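The summary line and distribution bars can be generated directly from raw ratings. The sketch below reproduces the “average • count • recency” format from the example above and renders a plain-text bar for each star level. The function names and the 20-character bar width are hypothetical choices; a production page would render real bars in HTML or CSS.

```python
from datetime import date


def rating_summary(ratings, latest_review: date, today: date):
    """Compact summary line: average stars, review count, and recency."""
    average = sum(ratings) / len(ratings)
    noun = "review" if len(ratings) == 1 else "reviews"
    days = (today - latest_review).days
    day_word = "day" if days == 1 else "days"
    return f"{average:.1f} • {len(ratings):,} {noun} • latest {days} {day_word} ago"


def rating_distribution(ratings):
    """Share of reviews at each star level, ordered 5 down to 1."""
    total = len(ratings)
    return {star: ratings.count(star) / total for star in range(5, 0, -1)}


def render_bars(distribution, width=20):
    """Plain-text bar chart: one line per star level with its percentage."""
    lines = []
    for star, share in distribution.items():
        bar = "#" * round(share * width)
        lines.append(f"{star}★ {bar:<{width}} {share:.0%}")
    return "\n".join(lines)


ratings = [5, 5, 4, 5, 3]
print(rating_summary(ratings, date(2024, 5, 1), date(2024, 5, 4)))
print(render_bars(rating_distribution(ratings)))
```
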

Give users robust filtering and sorting: allow filters for star rating, “verified purchase”, reviews with photos/videos, and keyword search within reviews, and offer sorting options like most helpful, most recent, and by relevance to the shopper’s query. Prioritize mobile-first interactions - collapse long reviews behind “read more”, lazy‑load media, and keep the top three reviews server‑rendered for SEO and performance. Add trust badges (verified purchase, third‑party moderation, and photo verified) next to reviewer names to increase perceived credibility, and surface manufacturer or brand responses inline to show active moderation and resolution.

Operationally, A/B test which summary signals drive the highest conversion in each category (stars + count vs. sentiment percentage vs. top pros/cons). Track metrics such as:

- review velocity;
- coverage (percentage of SKUs with ≥1, ≥5, and ≥50 reviews);
- conversion lift;
- helpful‑vote rates.

Make sure your review component records timestamps, reviewer-authenticity flags, and media presence so you can segment performance (for example, products with ≥10 photo reviews often convert 20-35% better). Make the moderation policy transparent and include an appeals path. Transparent moderation and a visible verified‑purchase badge are among the most effective trust levers you can deploy.
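The coverage metric listed above is a simple threshold count over your catalog. This sketch assumes you have a mapping from SKU to review count; the function name, default thresholds, and sample SKUs are hypothetical.

```python
def review_coverage(review_counts, thresholds=(1, 5, 50)):
    """Share of SKUs whose review count meets each threshold.

    `review_counts` maps SKU -> number of published reviews."""
    total = len(review_counts)
    if total == 0:
        return {t: 0.0 for t in thresholds}
    return {t: sum(count >= t for count in review_counts.values()) / total
            for t in thresholds}


print(review_coverage({"A": 0, "B": 1, "C": 6, "D": 60}))
```

Tracking these shares over time shows whether review-collection efforts are reaching the long tail of the catalog or only the bestsellers.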

Risks, Fraud Prevention, and Regulatory Landscape

Detecting and mitigating fake reviews; platform policies and enforcement

You should expect platforms to combine behavioral signals and content analysis to flag inauthentic reviews. Common inputs include:

- purchase-verification status;
- reviewer history and diversity;
- IP and device fingerprints;
- temporal burst patterns (many reviews for one SKU within hours);
- text-similarity or template detection across reviews.

Companies like Amazon add a "Verified Purchase" badge to prioritize reviews tied to confirmed transactions. Third-party auditors such as Fakespot and ReviewMeta have repeatedly reported that in some product categories up to ~30% of reviews appear suspicious. Machine-learning classifiers trained on these features, along with network analysis that maps seller-reviewer relationships, can detect coordinated campaigns with high precision. Be aware, though, that attackers continually adapt, using rotated accounts and human-written reviews to evade automated filters.
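Two of the signals listed above, temporal bursts and near-duplicate text, can be sketched with simple heuristics. This is not how any particular platform detects fakes:

- Burst detection finds the largest number of reviews posted within any sliding time window.
- Text similarity compares reviews by Jaccard overlap of their word 3-shingles.

The 6-hour window, the 0.6 similarity threshold, and all function names are hypothetical.

```python
from datetime import datetime, timedelta


def max_reviews_in_window(timestamps, window=timedelta(hours=6)):
    """Largest number of reviews posted within any `window`-long span,
    found with a two-pointer sweep over the sorted timestamps."""
    times = sorted(timestamps)
    best, start = 0, 0
    for end, t in enumerate(times):
        while t - times[start] > window:
            start += 1
        best = max(best, end - start + 1)
    return best


def shingles(text, k=3):
    """Set of k-word shingles from lower-cased, whitespace-split text."""
    words = text.lower().split()
    return {tuple(words[i:i + k]) for i in range(len(words) - k + 1)}


def jaccard(a, b):
    """Jaccard similarity of two reviews' shingle sets (0.0 if either is empty)."""
    sa, sb = shingles(a), shingles(b)
    if not sa or not sb:
        return 0.0
    return len(sa & sb) / len(sa | sb)


def near_duplicates(texts, threshold=0.6):
    """Index pairs of reviews whose shingle overlap meets `threshold`."""
    return [(i, j) for i in range(len(texts)) for j in range(i + 1, len(texts))
            if jaccard(texts[i], texts[j]) >= threshold]
```

The pairwise comparison is quadratic, which is fine for one SKU's reviews. Catalog-wide deduplication would typically use MinHash or a similar approximate index instead.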

Enforcement mixes automated removal with manual investigation and legal action. Platforms routinely delete reviews, suspend or ban accounts, and remove sellers from marketplaces; in more aggressive cases, they pursue civil suits against review brokers or coordinated networks. Regulators also play a role: under the U.S. Federal Trade Commission's endorsement rules, undisclosed paid endorsements are actionable and can lead to penalties and refund orders. Platforms and brands therefore face legal risk, account suspension, and reputational damage if they tolerate manipulation. To protect your buying and selling activities, use platform signals (like verified-purchase indicators), audit suspicious clusters yourself, and favor marketplaces that publish transparency reports and proactively disclose enforcement metrics.

Final Words

Ultimately, you depend on consumer reviews to turn shopper curiosity into purchase decisions. Authentic ratings and detailed testimonials provide the social proof that reduces perceived risk, increases click-throughs, and lifts conversion rates across channels. By prioritizing verified feedback and surfacing the most relevant comments, you make it easier for shoppers to trust your product and move from click to checkout.

To capitalize on that influence, you should amplify positive experiences, address negative reviews promptly to demonstrate responsiveness, and use review insights to refine product pages, pricing, and targeting. When you treat reviews as strategic assets rather than passive content, your marketing and product teams gain a continuous feedback loop that improves user experience and drives measurable sales growth.

FAQ

Q: How do consumer reviews influence conversion rates from click to checkout?

A: Reviews reduce uncertainty by providing social proof and first-hand experiences, which increases trust and shortens decision time. High average ratings and a substantial number of reviews typically lift conversion rates, while detailed reviews with images or usage context address common objections and lower return rates. Displaying recent, verified reviews at the point of decision and highlighting helpful excerpts in product summaries can move shoppers from browsing to buying more quickly.

Q: Which review elements matter most to shoppers when deciding whether to purchase?

A: Shoppers prioritize average star rating, total number of reviews, recency, and authenticity signals such as verified-purchase labels and reviewer profiles. Specifics in review text (measurements, fit, durability, and real-world use cases) carry more weight than generic praise. Visuals (user photos or videos), helpfulness votes, and responses from the brand further increase credibility and influence purchase intent.

Q: How should merchants integrate reviews into the checkout funnel to maximize impact?

A: Place review summaries and top helpful quotes on category pages, product pages, and near the add-to-cart button to reinforce confidence before checkout. Use snippets in cart reminders, exit-intent overlays, and post-click landing pages to reduce abandonment. Implement structured data for review snippets in search results, A/B test placement and language of excerpts, and collect post-purchase reviews to keep content fresh and relevant.

Q: What is the best way to handle negative reviews so they don't harm sales?

A: Respond quickly and professionally, acknowledge the issue, offer a solution or next step, and follow up privately when needed; public resolution signals responsiveness and can restore trust. Use negative feedback to identify product or process improvements and publish transparent updates when fixes are implemented. Leaving legitimate negative reviews visible alongside responses often increases perceived authenticity rather than reducing conversions.

Q: How do reviews interact with SEO and paid advertising to drive both traffic and purchases?

A: User-generated reviews create fresh, long-tail content that improves organic rankings and helps capture niche queries, while structured review markup can produce rich snippets that boost CTR in search. Reviews used in ad creative and landing pages add social proof that increases ad relevance and click-to-conversion ratios. Analyze sentiment and keyword patterns in reviews to optimize product descriptions, ad copy, and targeting for better performance across channels.