Data-driven insights : how to analyze and predict online consumer trends

Les données vous guident dans l'analyse du comportement de votre audience et la prévision de la demande. Cet article propose des méthodes pratiques pour transformer les signaux bruts en informations exploitables grâce à la segmentation, aux modèles de séries temporelles et aux tests A/B. Vous apprendrez à évaluer la qualité des données en tenant compte des risques liés à la confidentialité et des biais, à établir des processus fiables et à déployer des modèles prédictifs de l'intention d'achat. Appliquez ces techniques pour optimiser vos campagnes et augmenter vos conversions, tout en garantissant une rigueur éthique et technique.

Points clés à retenir :

- Intégrer des sources quantitatives et qualitatives (données transactionnelles, analyses web, écoute des médias sociaux et enquêtes) afin de dresser un tableau complet du comportement des consommateurs en ligne.

- Segmentez les utilisateurs et effectuez une analyse de cohorte pour identifier les tendances du cycle de vie, les facteurs de fidélisation et les groupes de clients à forte valeur ajoutée afin de mettre en place des stratégies ciblées.

- Tirer partie des prévisions de séries temporelles et de l'apprentissage automatique (par exemple, le gradient boosting, LSTM) ainsi que de l'inférence causale pour prédire les tendances et tester les facteurs de changement.

- Assurez une hygiène des données et une ingénierie des fonctionnalités rigoureuses, puis validez les modèles par des tests A/B et des tests rétrospectifs afin de maintenir des prédictions fiables.

- Opérationnalisez les informations grâce à des tableaux de bord, des alertes automatisées et des moteurs de personnalisation qui correspondent directement aux indicateurs clés de performance (KPI) de l'entreprise et aux actions tactiques.

Comprendre le comportement des consommateurs en ligne

Définition du comportement du consommateur en ligne

L'analyse du comportement en ligne permet de suivre le parcours des individus, de la produit d'un produit à l'achat, sur différents canaux : recherche, réseaux sociaux, places de marché et sites web. Les points de contact typiques incluent les requêtes de recherche organique, les publicités payantes, les pages produits, les avis et les interactions post-achat. L'ensemble de ces éléments constitue un parcours client mesurable , que vous pouvez cartographier à l'aide de données de session, d'entonnoirs de conversion et de modèles d'attribution.

Concrètement, vous segmenterez ce parcours client (type d'appareil, canal d'acquisition, zone géographique et valeur vie client) afin d'identifier les facteurs clés de conversion. Par exemple, les utilisateurs mobiles présentent souvent des taux de rebond plus élevés, mais des paniers moyens plus faibles. Optimiser l'expérience mobile et fluidifier le processus de paiement est donc essentiel pour récupérer rapidement le chiffre d'affaires perdu.

Facteurs influençant les décisions des consommateurs en ligne

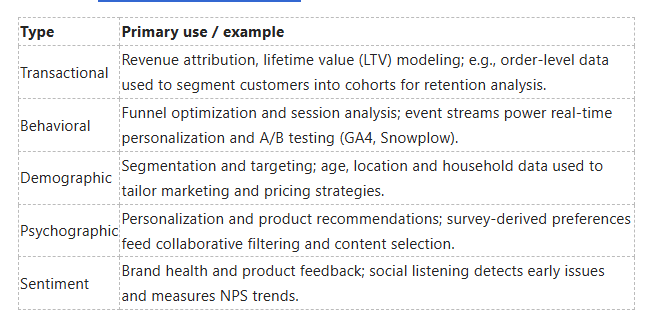

Le prix reste un facteur déterminant, mais il est également important de prendre en compte les indicateurs de confiance (avis, notes), la praticité (paiement en un clic, livraison rapide) et le risque perçu (politique de retour, sécurité des paiements). Les données montrent que les consommateurs comparent fréquemment les prix entre les différents vendeurs et se fient à la preuve sociale ; par conséquent, vos pages produits doivent mettre en avant les avis et des informations de livraison claires afin de lever les hésitations.

La personnalisation et la pertinence sont essentielles : les clients qui reçoivent des recommandations sur mesure se convertissent davantage, tandis que les expériences non pertinentes ou génériques augmentent le taux d'abandon de panier. Il est donc nécessaire de combiner les données comportementales, l'historique d'achats et les signaux présents sur le site pour proposer un contenu dynamique qui correspond aux intentions de l'utilisateur et améliorer la conversion .

- Sensibilité aux prix – la tarification dynamique et les promotions ont un impact direct sur la demande à court terme.

- Preuve sociale : les évaluations et le contenu généré par les utilisateurs influencent la confiance et la qualité perçue.

- Performance du site web : la vitesse de chargement des pages et la disponibilité du site influencent les premières impressions et les taux de rebond.

- Optimisation mobile : des mises en page pensées pour les mobiles et des processus de paiement simplifiés réduisent les abandons de panier.

- Confidentialité et sécurité : des politiques claires et des labels de paiement fiables influencent le risque perçu.

- Cette attente en matière de satisfaction client – livraison rapide et retours faciles – détermine souvent la décision d'achat finale.

Pour agir sur ces facteurs, il est conseillé de mettre en place des tests et des cohortes : réaliser des tests A/B sur différents niveaux de prix, tester différents emplacements pour les avis clients, mesurer l'impact des seuils de livraison gratuite et suivre les conversions par source de trafic afin de quantifier cet impact. Un indicateur souvent utilisé est qu'améliorer le temps de chargement d'une page d'une seule seconde peut réduire le taux de rebond et augmenter significativement les conversions. Par conséquent, les optimisations de performance offrent souvent un retour sur investissement élevé lorsqu'elles sont priorisées par rapport aux dépenses marketing.

- Tests A/B : utilisez des tests statistiques valides pour isoler les effets causaux sur la conversion.

- Analyse de cohorte – évaluation de l'évolution du comportement des clients fidèles par rapport aux nouveaux acheteurs.

- Segmentation – les micro-segments (par exemple, les utilisateurs mobiles à forte valeur vie client) révèlent différentes affinités avec les produits.

- Modèles d'attribution aux canaux qui génèrent réellement des revenus.

- Cette itération basée sur les données – tests et mesures continues – permet d'obtenir des améliorations cumulatives en matière de conversion et de fidélisation.

Le rôle de la psychologie dans les achats en ligne

Les facteurs psychologiques influencent les décisions bien plus qu'on ne le pense : la rareté, l'ancrage, le cadrage et les normes sociales modifiant tous la disposition à payer et le sentiment d'urgence. Par exemple, les mentions d'urgence comme « il n'en reste que 3 » et les promotions à durée limitée tirent partie de la rareté et augmentent souvent le taux de conversion de plus de 10 % lors de tests contrôlés, notamment pour les achats impulsifs où la réflexion est primordiale.

L'architecture des choix est essentielle : réduire les options, mettre en avant une référence recommandée ou présenter les différents niveaux de prix avec une option « meilleur rapport qualité-prix » clairement indiquée simplifier les décisions et augmenter le panier moyen. Il est également important de communiquer sur la prévention des pertes (retours gratuits ou garantie de remboursement) afin de rassurer les clients potentiels et d'améliorer les taux de conversion auprès des nouveaux clients.

En créant davantage, vous pouvez appliquer une segmentation comportementale : cartographiez les clients qui réagissent à la preuve sociale par rapport à ceux motivés par les promotions, puis adaptez les bannières, le contenu des e-mails et les invitations de paiement en conséquence afin que vos incitations UX correspondent aux déclencheurs de décision de chaque segment.

L'importance des données dans la connaissance des consommateurs

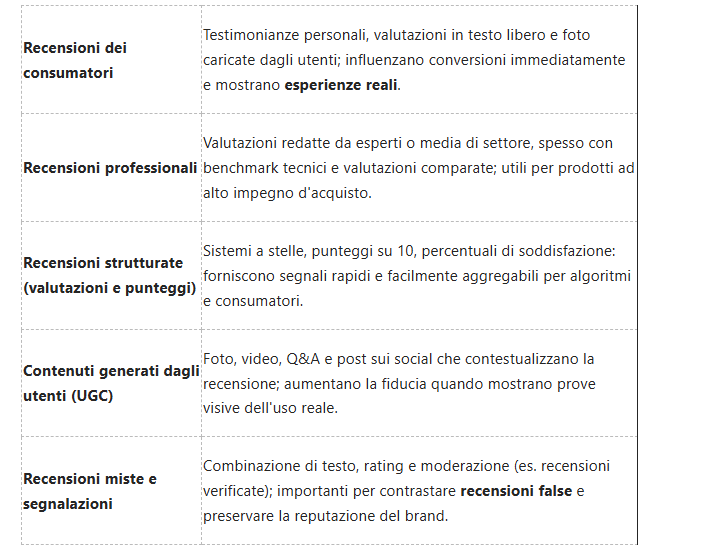

Types de données dans le commerce électronique

En général, les données sont segmentées en plusieurs catégories opérationnelles : enregistrements d'achats bruts, flux d'événements comportementaux, attributs démographiques, indicateurs psychographiques issus d'enquêtes et sentiment extrait des avis et mentions sur les réseaux sociaux. Les journaux transactionnels contiennent les achats au niveau de la référence produit, les horodatages et les identifiants de canal – ce qui peut représenter de 10 à 50 millions de lignes par an pour un détaillant de taille moyenne – tandis que les flux d'événements comportementaux (pages vues, ajouts au panier, validations de commande) sont idéalement saisis sous forme de schémas événementiels pour faciliter l'analyse du parcours client et l'attribution.

Les sources qualitatives, comme les avis en texte libre et les transcriptions de l'assistance, complètent les données numériques : le traitement automatique du langage naturel permet de convertir les sentiments et les intentions en caractéristiques pour les modèles prédictifs. Utilisez la liste ci-dessous pour garder les priorités visibles lors de la conception des pipelines :

- Données transactionnelles

- Données comportementales/événementielles

- Données démographiques

- Données psychographiques

- Écoute des sentiments et des réseaux sociaux

Knowing how to combine these sources - for example joining transactional LTV with behavioral churn signals and sentiment trends - lets you build models that commonly increase predictive accuracy above baseline by 20-40% in forecasting purchase probability.

Collecting Consumer Data: Methods and Tools

You should instrument both client-side and server-side touchpoints: use an event-based analytics setup (GA4, Mixpanel, Snowplow) for front-end interactions, server logs and order APIs for authoritative transactional records, and a customer data platform (Segment, RudderStack) to route events into your warehouse. Capture consistent event schemas (user_id, session_id, timestamp, event_name, product_id) to avoid costly ETL mapping later - inconsistent schemas are a common source of 30-50% of pipeline failures.

Complement passive capture with active collection: short post-purchase surveys (2-3 questions) raise response rates above 15% when offered with an incentive, and in-depth user interviews uncover intent drivers that quantitative data misses. For tooling, pair real-time analytics (Hotjar, FullStory) for behavior replay with batch processing (BigQuery, Snowflake) for model training; orchestration via Airflow or dbt keeps transformations auditable.

More specifically, you can reduce data latency to under an hour by routing events through a streaming stack (Kafka → Snowplow → warehouse) and improve model freshness: several retailers refresh propensity scores daily to support dynamic offers and have seen conversion uplifts of ~8-12%.

Ethical Considerations in Data Collection

You must design collection with privacy and compliance as architecture requirements rather than add-ons: implement consent banners that map to your event schema, maintain purpose limitation, and enforce retention schedules. Under EU law, noncompliance can result in fines up to €20 million or 4% of global turnover, and U.S. state-level regulations like CCPA can impose statutory penalties per violation - these are operational risks that impact budgets and reputation.

Apply privacy-preserving techniques where possible: aggregate or anonymize identifiers, use hashed IDs with rotating salts, and consider differential privacy for published analytics slices. From a trust perspective, transparent opt-in flows and simple data-access controls increase customer opt-in rates; firms that explicitly communicate benefits of data use commonly report double-digit increases in consent rates.

More operationally, perform periodic privacy impact assessments, limit access via role-based controls, and log data usage so you can demonstrate compliance during audits while minimizing re-identification risks and protecting your brand.

Analyzing Consumer Trends

Utilizing Descriptive Analytics

When you slice historical data, start with funnel and cohort metrics to ground decisions in measurable behavior: average ecommerce conversion rates hover around 2-3%, but cohorts can vary by an order of magnitude - for example, a new mobile cohort might convert at 0.8% while a repeat desktop cohort converts at 6%. Use cohort retention curves, daily active users (DAU) and weekly retention to reveal whether a spike in traffic is sustainable or just a transient campaign effect; this lets you prioritize fixes that improve lifetime value (LTV) rather than chasing one-off acquisition spikes.

You should also instrument event-level data so that aggregate reports don't hide behavior variance: segment by channel, product category, and UX variant to catch hidden friction. A/B test logs combined with descriptive dashboards let you quantify real-world impact - companies that operationalize cohort and funnel reporting often cut customer churn by addressing the top 10% of drop-off steps first, since those steps are typically responsible for the majority of revenue leakage.

The Role of Predictive Analytics

Predictive models turn the patterns you've observed into forward-looking signals: time-series forecasting (Prophet, ARIMA, XGBoost) for weekly demand, classification models for churn risk, and uplift models for targeting incremental responders. You should prioritize models based on ROI - for instance, a churn classifier that identifies the top 5% highest-risk customers can guide retention offers that may deliver a 5-15% lift in retention for that segment when paired with the right treatments.

Feature engineering matters more than fancy algorithms: recency, frequency, monetary (RFM) scores, velocity of search interest, and product-view depth are often the top predictors of short-term purchase likelihood. In practice, retailers use ensembles of gradient boosting and survival analysis to predict both the probability and timing of next purchase, enabling inventory planning and dynamic promotions that reduce stockouts and markdowns.

Be aware of non-technical risks: predictive systems can amplify bias in historical data and expose you to privacy backlash if targeting is too granular - the famous case where a retailer inferred pregnancy status is an example of dangerous misuse that cost reputation. Mitigate this by adding privacy-preserving constraints, using explainability tools for model decisions, and A/B testing predictive interventions before scaling.

Tools for Consumer Trend Analysis

You'll typically combine an event pipeline, a data warehouse, and analytics/ML tooling: common stacks include GA4 or server-side event collection → BigQuery or Snowflake → Looker/Tableau/Metabase for BI, and Python/R or Databricks for modeling. For product analytics, Amplitude and Mixpanel provide built-in funnel, cohort and retention reports; enterprises often layer these with a centralized warehouse to run custom joins and ML at scale.

When choosing tools, weigh cost and scale: GA4 and Metabase have low entry costs but limited ML integration, while Snowflake or BigQuery add storage/compute costs (for example, BigQuery charges per TB scanned) but enable complex joins across sessions, orders and CRM records. You should also consider experimentation platforms like Optimizely or an internal experiment framework - Booking.com is a notable example that runs thousands of daily experiments to continuously validate hypotheses.

For implementation, standardize on a canonical event schema (user_id, event_name, timestamp, product_id, price, channel) to avoid ETL drift, and pair that with orchestration tools (Airflow, dbt) so your dashboards and models remain reproducible; this reduces time-to-insight when you scale from 10K to 10M events/day and need reliable, low-latency signals for both real-time personalization and weekly forecasting.

Sources of Data for Trend Analysis

Website Analytics

When you dig into your web analytics, focus on event-level and funnel metrics: sessions, conversion rate, average order value (AOV), cart abandonment, and micro-conversions like add-to-cart or newsletter sign-up. In practice, GA4’s event model gives you raw-event granularity so you can track that a specific campaign UTM drove a 12% higher add-to-cart rate; pair that with heatmaps and session recordings from tools like Hotjar to see where users hesitate. High bounce rates (over 60%) on key landing pages and sudden drops in conversion rate are immediate signals that require A/B testing or UX fixes.

Segmenting by traffic source, device, and cohort is how you find patterns that matter: compare organic vs. paid cohorts over 30-, 60-, and 90-day windows to detect retention shifts, and run A/B tests with a minimum sample size to reach statistical significance (commonly p<0.05). Server-side tracking and first-party data capture mitigate ad-blocker sampling; for example, a mid-market retailer recovered ~18% of lost tracked revenue after implementing server-side tagging and fixing misattributed referral traffic.

Social Media Metrics

Start with reach, impressions, engagement rate, share of voice, and sentiment, and then layer in qualitative signals from comments and DMs. Engagement benchmarks vary by platform-Instagram posts often target a 1-3% engagement rate while TikTok normal engagement can exceed 6% for short-form content-so you should set platform-specific KPIs. Use tools like Brandwatch, Sprout Social, and native analytics to spot spikes: a sudden viral spike in mentions can lift brand searches and short-term sales, but it can also amplify negative sentiment if not managed quickly.

Social listening differs from native metrics: it aggregates public mentions across forums, blogs, and channels and applies NLP to surface trending topics and sentiment. For reliable trend detection, you typically want at least several hundred mentions per week; NLP models often report sentiment accuracy between ~70-85%, so you should manually validate clusters that show escalating negative sentiment. An FMCG brand that flagged 1,200 negative mentions about packaging within a week used that insight to change copy and design, cutting returns by 12% the next quarter.

To convert social signals into forecasts, map organic and paid activity to cohorts with UTM-tagged landing pages and use time-series regression or Granger causality tests to identify leading indicators; for instance, one retailer found a consistent three-week lead where trending TikTok views predicted a 7% uplift in weekly sales. Exercise caution interpreting correlation as causation-control for seasonality, paid spend, and offline campaigns when you model social effects.

Market Research Reports

Use syndicated and custom reports from providers like Nielsen (panels of ~40,000 households), Euromonitor, Forrester, and Statista to get category-level benchmarks, TAM/SAM/SOM estimates, and long-term CAGR forecasts-Euromonitor projected a ~5% CAGR for global e‑commerce apparel over 2024-2029 in recent coverage. Executive summaries are often free, but full datasets can cost thousands to tens of thousands of dollars; paywall and proprietary methodology mean you must scrutinize sample sizes and weighting schemes before you apply numbers to your forecasts.

Triangulate these reports with your first-party data: use market-share benchmarks and consumer preference stats (e.g., a Mintel study showing ~68% of respondents prioritize sustainability) to validate demand assumptions and product roadmaps. Reports frequently lag current behavior by 3-6 months, so combine them with real-time signals from web and social analytics to avoid relying solely on stale inputs.

If you commission custom research, plan for statistically meaningful sample sizes (n=1,000 typically yields ~±3% margin of error at 95% confidence) and mix quantitative surveys with qualitative focus groups for nuance. Ensure survey design minimizes bias and includes clear demographic quotas, because poorly designed primary research can mislead product and pricing decisions despite high costs.

Segmenting Online Consumers

Demographic Segmentation

Demographics give you the cleanest, fastest slices: age cohorts, gender, household income, education, and geography. For example, Gen Z (born 1997-2012) and Millennials (born 1981-1996) often dominate mobile-first purchases and social-commerce channels, while buyers aged 45+ tend to convert more on desktop and email-driven campaigns. Use demographic overlays on your CRM and ad platforms to see where conversion rates and average order values diverge; in many e-commerce verticals, customers in the >$100k household-income bracket can produce 20-40% higher AOV than lower-income segments.

Segmenting by location also reveals large behavioral differences: metropolitan ZIP codes may show higher lifetime value but also higher CAC. Combine demographic filters with device and channel data to avoid waste - misaligned creatives or landing pages for the wrong age group can increase bounce rates and inflate ad spend by up to 30% if you target broadly without segmentation.

Behavioral Segmentation

Behavioral segments come from what users actually do: frequency of visits, recency of purchase, product categories viewed, cart-abandonment events, and post-purchase behaviors. Implement RFM (recency, frequency, monetary) scoring to separate first-time browsers, repeat buyers, and lapsed customers; you’ll find that high-frequency, low-recency cohorts often drive the bulk of short-term revenue while high-monetary, low-frequency cohorts are your best candidates for loyalty programs.

Actionability is the advantage here: target cart abandoners with triggered email sequences and you can typically recover 10-15% of otherwise lost orders; push personalized recommendations based on browsing history and watch click-through and conversion rates climb. Also track micro-conversions (add-to-wishlist, video views) as predictive signals for purchase intent, then feed those signals into lookalike audiences for acquisition.

For implementation, instrument event tracking in tools such as GA4, Mixpanel, or Amplitude and run cohort analyses weekly to spot churn or engagement shifts; model-based approaches (logistic regression or tree-based models like XGBoost) will let you predict purchase propensity and assign customers to lifecycle-based journeys. Don’t ignore privacy constraints - comply with GDPR/CCPA when using behavioral identifiers and always provide opt-outs.

Psychographic Segmentation

Psychographics let you target the why behind behavior: values, interests, lifestyles, and personality traits. Segmenting by psychographic attributes-such as sustainability-minded shoppers, bargain hunters, or brand-loyal enthusiasts-enables messaging that resonates emotionally; studies and A/B tests often show that psychographic-tailored creatives produce lifts in engagement and conversion, commonly in the 10-25% range depending on the product and channel.

Collect psychographic data through short onsite surveys, social-listening, product reviews, and ad-engagement signals. For instance, customers who interact with sustainability content and choose eco filters in checkout are a high-value segment for green product lines, so you should prioritize personalized content and product bundles for them. Combine psychographic tags with demographic and behavioral layers to create high-precision audiences for paid media and email.

To operationalize psychographics, apply NLP on open-text survey responses and social posts, then use clustering (k-means, hierarchical clustering, or embedding-based methods) to surface natural groupings; ensure sample sizes of at least a few hundred profiles per market to stabilize clusters and avoid overfitting your messaging to noisy signals.

Identifying Key Performance Indicators (KPIs)

Defining KPIs for E-Commerce

When you pick KPIs, prioritize measures that map directly to revenue and actionable levers: conversion rate (orders / sessions), average order value (AOV), customer lifetime value (CLTV), customer acquisition cost (CAC), repeat-purchase rate, return rate, and cart abandonment. For context, many benchmarks place global e-commerce conversion rates around 2-3% and cart abandonment near 69.6% (Baymard Institute), so tracking these lets you spot underperformance quickly.

Match KPIs to your business stage: early-stage brands often emphasize traffic quality and CAC payback (target payback within 12 months), growth-stage brands push AOV and CLTV (aim for a CLTV:CAC ratio near 3:1), and mature retailers layer in margin, return rates, and NPS. Use a mix of macro (revenue, orders) and micro KPIs (add-to-cart rate, checkout-start rate) so you can run experiments that tie UX changes to bottom-line impact.

Measuring Customer Engagement

Track both behavioral metrics and interaction signals: pages per session, session duration (many sites average ~2-3 minutes), frequency of visits, email open and click-through rates, product detail views, add-to-cart rate, and social/sharing actions. High bounce rates (>40-60% depending on traffic source) or low pages-per-session are danger signals that your acquisition or landing experience isn't meeting expectations.

Complement quantitative metrics with qualitative indicators like product reviews, on-site search queries, chat transcripts, and CSAT scores. Users who return for multiple sessions typically convert at a higher rate-repeat visitors can convert at roughly 2-3x the rate of first-timers-so segment engagement by cohort and channel to prioritize reactivation tactics.

Use event-level tracking in tools such as GA4, Mixpanel, or Amplitude and tie events to user IDs so you can build engagement scores (for example, weight add-to-cart = 2, repeat visit = 3, purchase = 10). Be mindful that misconfigured events or missing UTM parameters will erode your engagement signals and lead to bad decisions, while a reliable engagement score lets you automate re-engagement campaigns and lifecycle messaging.

Analyzing Conversion Rates

Calculate conversion rate as conversions divided by relevant sessions and look beyond the headline: measure conversion at each funnel step (landing → product → add-to-cart → checkout → purchase) and for each segment (device, source, campaign). Industry patterns show desktop often outperforms mobile (mobile conversions commonly near ~1-1.5% vs desktop near ~3-4%), so device-level analysis is vital for prioritizing mobile UX fixes.

Run funnel and cohort analyses to locate drop-off points: if checkout-start-to-purchase conversion is low but add-to-cart is healthy, focus on payment, shipping cost, and form friction. A/B tests on checkout simplification or express payment options frequently return double-digit gains; for example, reducing required fields or enabling saved payment methods often yields 10-30% uplifts in checkout conversion in published case studies.

When you design experiments to improve conversion, plan sample sizes to detect realistic effects-detecting a 10% relative lift on a 2% baseline (to 2.2%) typically requires tens of thousands of sessions to reach statistical power. Avoid stopping tests early; premature conclusions inflate false positives, whereas properly powered experiments give you dependable, repeatable conversion gains.

Techniques for Predictive Modeling

Machine Learning Algorithms in Consumer Analysis

You can begin with interpretable models such as logistic regression or decision trees to establish baselines-these often reveal which features (RFM scores, session length, referral source) drive conversion before you move to complex models. Tree-based ensembles like Random Forest and XGBoost typically deliver the best out-of-shelf performance for click-through and purchase prediction; practitioners routinely see AUC improvements from ~0.72 to ~0.82 when replacing linear baselines with boosted trees on behavioral datasets of 100k+ users. Feature engineering matters more than model choice: create lagged features, session embeddings, categorical target encodings, and aggregate signals (7-day rolling conversion rate, user tenure buckets) to capture behavior patterns that models alone won't infer.

You should guard against overfitting and data leakage by enforcing strict time-based cross-validation (time folds or rolling windows) and by testing models on future holdouts; use calibration (Platt or isotonic) when probabilities feed personalization rules. For recommendation tasks, combine collaborative filtering (matrix factorization, ALS) with content-based or embedding approaches; Netflix-style hybrid models and deep learning embeddings often boost relevance in sparse item catalogs. Finally, apply interpretability tools like SHAP to produce actionable insights for product and marketing teams and set up automated drift detection so a model trained on Q1 behavior doesn't degrade in Q3 after a product change.

Time Series Analysis for Trend Prediction

You should decompose signals into trend, seasonality and residuals before selecting a forecasting algorithm: use STL or seasonal decomposition to identify weekly or yearly cycles and run an ADF test to check stationarity, applying differencing when necessary. Traditional methods-ARIMA/SARIMA and Holt-Winters-excel at short-term, well-behaved series; Facebook Prophet or SARIMAX with holiday/exogenous regressors works well when promotions or holidays cause systematic spikes. For example, retailers that add holiday regressors often reduce MAPE by ~5-8 percentage points compared to naive seasonal models.

When you need to capture non-linear temporal dependencies or multi-step horizons, leverage LSTM/Temporal CNNs or Transformer-based time series models on sequences of user-level or aggregate signals; these models can incorporate covariates like price changes, ad spend, and inventory levels as exogenous inputs. Evaluate forecasts with rolling-window cross-validation and metrics such as MAPE, RMSE, and coverage of prediction intervals, and produce prediction intervals (e.g., 90% PI) so stakeholders understand uncertainty around capacity planning or campaign pacing.

For complex catalogs, implement hierarchical forecasting and reconciliation (MinT) so product-, category-, and total-level forecasts are consistent; also consider ensembles (SARIMA + Prophet + LSTM) which often reduce error by 10-20% versus single-model forecasts. You should explicitly model promotion lift with holdout tests or uplift estimates, and use backtesting across multiple seasonal cycles (12+ months) to validate model robustness before deploying forecasts to demand-sensing or dynamic pricing systems.

A/B Testing for Consumer Preferences

You must design experiments with adequate statistical power and pre-specified metrics: compute sample size using baseline conversion, desired minimum detectable effect (MDE), significance (α) and power (1-β). For instance, with a baseline conversion of 5% and an MDE of 0.5 percentage points at 80% power and 95% confidence, you need roughly ~29,800 users per variant. Protect results from false positives by controlling for multiple comparisons (Bonferroni or Benjamini-Hochberg) when running many variants or segments.

You should avoid peeking and ad-hoc stopping rules that inflate Type I error; either fix sample size and duration up front or use properly corrected sequential methods (alpha-spending, group sequential) or Bayesian approaches with pre-registered stopping criteria. For personalization, implement uplift modeling or heterogenous treatment effect estimation so you can target variants to segments that gain the most value, and consider switching to multi-armed bandits only after you have stable metrics and understand long-term effects on retention, not just short-term conversion.

Operationally, validate instrumentation (event definitions, deduplication, consistent attribution windows) and run experiments over full weekly cycles to avoid day-of-week bias; also include long-term metrics (LTV, churn) in analysis when a short-term lift might reduce downstream value. You should document stopping rules and publish confidence intervals or credible intervals alongside p-values to give stakeholders a balanced view of both effect size and uncertainty.

Harnessing Big Data for Consumer Insights

Understanding Big Data in E-Commerce

When you combine transaction logs, clickstream events, mobile telemetry and social feeds, you build a data substrate that captures both intent and context at scale. That mix of volume, velocity and variety lets you detect micro-segments, predict purchase windows and quantify lifetime value with far greater resolution than traditional panel surveys; for example, integrating clickstream and purchase history often boosts segmentation accuracy by double digits and can increase conversion rates by 10-30% in targeted campaigns.

Because much of that data is unstructured, you will rely on NLP for reviews and chat logs, and on session-level analytics to map funnels and drop-off points. At the same time, you must treat privacy and governance as operational constraints: poorly managed identifiers or model leakage can create compliance exposure and reputational risk, while properly anonymized and consented datasets enable predictive models that raise average order value and reduce churn.

Tools and Technologies for Big Data Analysis

To process and store large datasets you typically choose between distributed file systems and cloud data lakes such as AWS S3, Azure Data Lake or Google Cloud Storage, and pair them with processing engines like Apache Spark or Apache Flink for batch and streaming. For analytics at petabyte scale you’ll see platforms like BigQuery, Snowflake or Amazon Redshift, which are designed to run complex SQL scans across massive tables in seconds to minutes depending on query complexity.

On the ingestion and orchestration side, Apache Kafka and cloud stream services (Kinesis, Pub/Sub) are common for high-throughput eventing (often handling hundreds of thousands to millions of messages per second in production clusters), while Airflow or managed workflow services coordinate ETL, feature builds and model retraining. For modeling you’ll use frameworks like XGBoost, TensorFlow or PyTorch alongside MLOps tooling such as MLflow or Databricks for experiment tracking and deployment.

More operational detail matters: you should adopt a feature store (e.g., Feast or Databricks Feature Store) to ensure consistency between training and serving, implement model monitoring to detect concept drift, and apply data cataloging and lineage for auditability and regulatory compliance (GDPR/CCPA). These pieces typically reduce time-to-production for new models and cut retraining friction substantially.

Case Studies on Big Data Applications

Several large-scale deployments illustrate how you can apply big data across commerce, logistics and media. Companies that combine high-frequency event capture with robust feature engineering tend to realize the fastest returns: recommendations, dynamic pricing and supply-chain optimization regularly produce measurable revenue and cost improvements when driven by reliable streaming data and continuous model evaluation.

Ethical and privacy trade-offs surface in these implementations as well; you must balance personalization gains against data minimization and transparency to maintain customer trust and avoid fines.

- Amazon - moteur de recommandation : il générerait environ 35 % du chiffre d’affaires total en proposant des suggestions de produits personnalisées basées sur l’historique des achats, le comportement de navigation et le filtrage collaboratif.

- Alibaba - Singles' Day (2019) : a géré une demande massive de ventes flash, générant environ 268 milliards de RMB de valeur brute de marchandises en combinant l'inventaire en temps réel, le profilage des utilisateurs et le ciblage dynamique des promotions sur des millions de sessions.

- Netflix - personnalisation : utilise les signaux de visionnage et les tests A/B pour adapter les recommandations ; selon les estimations du secteur, ses systèmes de recommandation et de personnalisation génèrent environ 1 milliard de dollars de revenus/valeur par an.

- UPS - optimisation des itinéraires (ORION) : application d'analyses au niveau des itinéraires et d'algorithmes d'optimisation pour économiser environ 300 millions de dollars par an en carburant et en coûts opérationnels grâce à un routage plus efficace.

- Objectif – analyse prédictive des événements du cycle de vie : utilisation des habitudes d’achat pour identifier les principaux signaux des étapes de la vie (par exemple, la grossesse) avec des taux de précision du modèle rapportés de l’ ordre de 70 à 80 % , démontrant à la fois la puissance et la sensibilité à la vie privée des modèles comportementaux.

Ces exemples illustrent comment différents objectifs commerciaux correspondent à différentes architectures : vous aurez besoin de flux à faible latence pour la personnalisation, d’un OLAP à grande échelle pour l’analyse de cohortes et d’une gouvernance robuste pour atténuer les risques liés à la confidentialité. Les choix d’implémentation, tels que les pipelines de fonctionnalités en temps réel par rapport au traitement par lots nocturne, influencent directement l’impact mesurable sur la conversion, la fidélisation et les coûts.

- Booking.com – l’expérimentation à grande échelle : réalise des dizaines de milliers de tests A/B chaque année pour optimiser l’expérience utilisateur et la tarification, ce qui entraîne des augmentations de revenus progressives mesurées en pourcentages à un ou deux chiffres pour les flux clés.

- Uber – Tarification et répartition dynamiques : exploite la télémétrie urbaine en temps réel et les modèles de prévision de la demande pour équilibrer l’offre et la demande ; les systèmes de production traitent des millions d’événements par seconde et ajustent la tarification et la mise en relation pour améliorer l’utilisation.

- Spotify - recommandations basées sur la consommation : combine le contexte d’écoute, les filtres collaboratifs et l’intégration de contenu pour augmenter la durée des sessions et les indicateurs de découverte, contribuant ainsi de manière significative aux KPI d’engagement.

- Walmart - Prévision des stocks : intègre les données des points de vente, la météo et les événements locaux pour réduire les ruptures de stock et améliorer la rotation des stocks ; des modèles de prévision avancés ont permis de réduire les pertes de ventes et de diminuer les coûts de stockage dans les régions pilotes.

- Chaîne de magasins X - promotions ciblées : une épicerie de milieu de gamme a utilisé les données de transaction et de fidélité pour diffuser des coupons hyper-ciblés, obtenant ainsi une augmentation de 20 à 30 % des taux d'utilisation et une augmentation mesurable de la taille du panier pour les cohortes ciblées.

Écoute sociale et analyse du sentiment des consommateurs

Defining Social Listening

When you implement social listening, you move beyond isolated mentions and build a continuous feed of public signals - posts, comments, reviews, and forum threads - that reveal how conversations about your brand, products, and competitors evolve over time. You should treat it as both a signal detection system and an input to your analytics pipeline: track volume, velocity, and source so you can spot a sudden spike (for example, a 200-400% jump in mentions after a viral post) that demands immediate action. Real-time monitoring plus historical trend analysis lets you detect sentiment shifts before they hit conversion metrics.

You will differentiate listening from simple monitoring by adding context: intent, channel, and topic categorization. For instance, grouping mentions by product SKU or campaign tag lets you see that a soft-launch produced 12% more praise on Reddit but 18% more complaints on Instagram, which informs channel-specific fixes. Actionable categorization - not raw mention counts - is what turns social data into product decisions.

Tools for Social Listening

You can choose between SaaS platforms (Brandwatch, Sprout Social, Meltwater), social management suites (Hootsuite), and bespoke stacks built on streaming APIs plus Elasticsearch/Kibana for search and visualization. Enterprise tools typically ingest millions of mentions per day, provide prebuilt classifiers for language and sentiment, and surface influencers by reach; lightweight tools often limit you to keyword alerts and basic dashboards. Combining a commercial tool for coverage with open-source components for custom analytics is a common pattern.

Pricing and feature tiers vary widely: expect starter plans under a few hundred dollars per month for single-user monitoring, while full enterprise solutions with historical archives, multilingual NLP, and SLA-backed support often run into the thousands monthly. You should evaluate tools on three practical criteria - query fidelity (boolean/regex support), exportable raw data, and integration options (webhooks, data warehouse connectors) - since those determine how easily you operationalize insights.

You must also consider compliance and data governance: tools that provide off-platform archival, PII redaction, and configurable retention windows will reduce legal risk when you store social text in your data lake. API access and ETL readiness matter because ingest pipelines that normalize handles, deduplicate reposts, and tag language at scale save hours of noisy preprocessing.

Analyzing Consumer Sentiment

You should apply layered sentiment techniques: start with lexicon-based scoring for speed, add machine-learning classifiers for domain nuance, and deploy transformer models (BERT variants) when you need to handle context, emojis, or sophisticated phrasing. Typical performance ranges start around 60-75% accuracy for generic lexicons, rising to 75-90% for supervised models trained on domain-specific labels; sarcasm and mixed sentiment remain common failure modes, so measure precision and recall, not just overall accuracy.

You will make sentiment actionable by combining polarity scores with volume and influence: compute a weighted sentiment index where mentions are weighted by user reach or likelihood to convert, then set thresholds for alerts (for example, flag a campaign when weighted negative sentiment increases by >5 percentage points week-over-week). Correlate those signals with conversion rates and support tickets to prioritize remediation - a 6-8 point negative swing that coincides with a 10% drop in add-to-cart is an operational priority.

To maintain model reliability, incorporate human-in-the-loop validation: sample and label new mentions weekly, track drift metrics, and retrain when F1 performance drops beyond pre-set bounds. Labeling density matters - aim for several thousand annotated examples per major language or product line to reach stable performance, and use continuous evaluation against holdout sets to avoid silent degradation.

Implementing Data-Driven Marketing Strategies

Personalization Techniques

When you apply personalization at scale, you can see measurable lifts: targeted product recommendations commonly produce 10-30% higher average order value compared with generic catalogs. Use a hybrid of collaborative filtering for behavioral similarity and content-based models for new items, then deploy real-time rules that alter homepage banners, email subject lines, and checkout cross-sells based on session intent signals (search queries, cart contents, dwell time). Split-test recommendation placement and algorithm variants; for example, an A/B test that moved a “recommended for you” module above-the-fold increased CTR by 18% in one mid-market retailer.

Prioritize first-party signals and an identity graph to link web, app, and CRM touchpoints so your personalization models avoid over-relying on third-party cookies. Keep privacy and consent in the loop: segment models by opted-in audiences and create fallback personalization for anonymous users (e.g., category-level affinities). Operationalize a feedback loop where purchase outcomes retrain your ranking model weekly, and monitor lift by cohort to detect model drift.

Targeted Advertising

Programmatic channels now capture well over 70% of display ad spend, so integrate demand-side platforms (DSPs) with your data pipeline to push audiences in near real-time. Build layered audiences-combine recency (last 7 days), frequency (2+ sessions), and predictive LTV (top 20%)-and apply bid multipliers to prioritize high-value segments. For example, you might bid 2x for users in the top decile of predicted LTV while capping bids for low-value cohorts to protect CAC.

Use lookalike modeling on hashed CRM segments to expand reach without diluting quality; calibrate the similarity threshold to balance scale vs. precision. Monitor CPM, CTR, and especially downstream metrics like CPA and ROAS; when you see increasing CPMs due to competition, shift to creative or placement optimization-carousel creatives and native placements often reduce CPM by 15-25% relative to standard banners.

Address platform and privacy changes proactively: since iOS ATT and cookie deprecation limit deterministic targeting, prioritize server-side matching, first-party event ingestion, and contextual signals (page topic, time of day) to preserve performance. Also implement rigorous attribution windows and incrementality tests (holdout groups) so you can quantify the real lift from targeted ad spend rather than relying solely on last-click metrics.

Retargeting Strategies

Given typical cart abandonment rates of around 60-80%, retargeting is where you recover high-intent users efficiently-start with dynamic product ads that pull SKU, price, and image from your feed and show the exact item a user viewed. Segment your retargeting windows: use 1-7 day windows with higher-frequency caps for low-consideration items, and extend to 30-90 days for high-ticket goods while sequencing creative from reminder → social proof → discounts.

Implement frequency capping and creative rotation to prevent ad fatigue; a common rule is to cap impressions to 6-10 per user per week and shift messaging on impression 3 (e.g., from awareness to urgency). Combine on-site signals (product viewed, time on page) with behavioral triggers (cart add but no checkout) to prioritize audiences-this often reduces CPA versus broad retargeting lists by a meaningful margin.

Increase ROI by integrating retargeting with email and push channels: suppress users who converted in the last 24-48 hours, and use cross-channel sequencing so that paid ads reinforce email messages rather than repeat identical offers. Maintain a suppression list from CRM to avoid wasted spend and compliance issues, and routinely test window lengths and discount thresholds to find the optimal balance between conversion lift and margin erosion.

The Future of Online Consumer Trends

Emerging Technologies Impacting Consumer Behavior

AI-driven personalization will continue to dictate purchase paths: recommendation engines currently account for roughly 35% of Amazon’s purchases, and similar systems can lift conversion rates by 5-15% when implemented across product pages and cart flows. You’ll see generative AI produce dynamic product descriptions, tailored email copy, and real-time cross-sell suggestions; combining these with conversational agents lets you resolve routine queries at scale - chatbots already handle as much as 70% of simple customer interactions in some deployments, cutting response time while increasing engagement.

Augmented reality and virtual try-ons reshape intent by reducing uncertainty: brands like Sephora and IKEA use AR to let shoppers visualize products in context, which directly improves conversion and reduces returns in beauty and furniture categories. At the same time, blockchain-based provenance and IoT-enabled product telemetry give you verifiable supply-chain data that consumers value for high-ticket or sustainability-driven purchases; however, these gains come with heightened privacy and security risk, so you must enforce robust encryption and consent flows to avoid regulatory fines and reputational damage.

Predictions for E-Commerce

Mobile-first commerce will continue to dominate-already exceeding desktop in traffic-and you should expect mobile to represent a majority of transactions by default, pushing you to optimize for speed and one-tap checkout. Subscription and recurring-revenue models will expand beyond media and FMCG into categories like personal care and household goods, driving stronger lifetime value (LTV) metrics; McKinsey-style analyses suggest tailored personalization can deliver a 5-15% revenue uplift, so prioritizing real-time decisioning systems pays off. Marketplaces and DTC hybrids will coexist: you’ll need to sell where customers discover products while maintaining your branded experience to protect margins.

Operationally, expect investments in headless and composable commerce to accelerate: these architectures shorten time-to-market for experiments and integrations, enabling faster A/B testing and feature rollout. Delivery expectations will tighten-same-day and sub-24-hour options will be table stakes in dense markets, pushing you to adopt micro-fulfillment centers or dark stores; meanwhile, sustainability will continue to influence purchase decisions, with roughly two-thirds of consumers indicating preference for greener options, which affects packaging, shipping choices, and supplier selection.

More info: prioritize a phased roadmap-start with a 6-12 month personalization pilot tied to a measurable KPI (e.g., a target 10% conversion uplift in the pilot segment), then scale based on statistical lift and operational readiness. Allocate budget to both experimentation (A/B, holdout cohorts) and infrastructure (data pipelines, model ops) so you can iterate quickly without sacrificing stability.

Adapting to Changing Consumer Needs

You must build feedback loops that turn behavioral signals into product and marketing actions within days, not quarters: implement event-driven analytics, real-time segments, and automated lifecycle campaigns triggered by behavior (cart abandonment, browse-to-buy, post-purchase). Inventory transparency and flexible fulfillment options-buy online pickup in store (BOPIS), curbside, and rapid returns-will materially affect churn; retailers who improve fulfillment transparency typically see lower return friction and higher repeat purchase rates, but failure to adapt creates operational bottlenecks and lost revenue.

Data governance and privacy-forward design are core to maintaining trust as you personalize: shift to first-party data strategies, granular consent, and privacy-preserving modeling (differential privacy, federated learning) so you can maintain personalization without risking compliance violations. Cross-functional playbooks-marketing, product, supply chain, and CX-must align on SLOs for experimentation, data quality, and time-to-insight to keep pace with consumer expectations.

More info: operationalize adaptation with a quarterly customer-journey audit, a 90-day experimentation backlog, and a single source of truth for customer profiles; set a goal to reduce time from insight to live test to under 30 days and measure success by lift per experiment and net promoter changes.

Challenges in Analyzing Consumer Trends

Data Privacy Concerns

You face mounting regulatory and technical constraints that change how you collect and link consumer signals: the EU’s GDPR (enforceable since 2018) allows fines up to €20 million or 4% of global turnover, while state laws like CCPA impose statutory penalties that can reach thousands per violation. Browser and platform shifts - Safari and Firefox blocking third-party cookies and platform-level changes like Apple’s App Tracking Transparency - have already degraded third-party identifier availability and forced many attribution models to lose signal quality within months.

You can mitigate exposure by embedding privacy-preserving architectures into your analytics stack: adopt consent management platforms, apply strong data minimization and retention policies, and evaluate techniques such as differential privacy, federated learning, and synthetic data for modeling. At the same time, prepare governance artifacts (data inventories, DPIAs) and incident plans so that when regulators audit or consumers complain, you can show documented, defensible practices rather than reactive fixes.

Data Interpretation Difficulties

Spurious relationships and confounding variables regularly mislead teams - correlation without causal proof causes expensive missteps, like increasing spend on channels that coincidentally rose during a promotion. Running dozens of A/B tests or slicing segments aggressively inflates the false positive rate; statistically, if you run 20 independent tests at α=0.05 you should expect about one false positive unless you adjust for multiple comparisons.

Instrumented metrics can be inconsistent: event taxonomy drift, duplicate tracking pixels, differing attribution windows, and patchy mobile-to-web stitching all change reported results from one week to the next. That variability makes KPI comparisons brittle and can shift perceived ROI between channels even when underlying consumer behavior is steady.

To reduce misinterpretation you should pre-register hypotheses, apply multiple-comparison corrections (Bonferroni or Benjamini-Hochberg where appropriate), and deploy causal inference techniques - difference-in-differences, synthetic controls, uplift modeling - plus robust holdout groups for attribution. Combine model explainability (SHAP, feature importance) with regular metric audits so you can trace whether a signal is real or an artifact of instrumentation or sampling bias.

Staying Relevant in a Fast-Paced Environment

Trends now emerge and peak in days on platforms like short-form video channels (TikTok crossed 1 billion MAUs in 2021), so you must compress the decision loop from weeks to hours if you want to capitalize. Rapid virality can create short-lived demand spikes that reward nimble inventory, dynamic creative, and immediate pricing or shipping changes; if you wait for quarterly reporting, you’ll miss the window to scale profitably.

You should build an operational playbook that supports fast experiments: automated near-real-time ETL, daily dashboards, anomaly detection alerts, and a test-and-learn budget for rapid merchandising and creative trials. Organize cross-functional squads that can take a social signal through validation, merchandising, and paid distribution inside a single sprint to turn early signals into measurable revenue before the trend decays.

Operationally, set concrete cadences - for example, daily anomaly monitoring, weekly model retraining for volatile categories, and a rapid feedback loop that measures conversion lift within 48-72 hours of campaign changes - and instrument KPIs like time-to-insight and test win-rate so you can quantify whether your processes are actually keeping pace with market velocity. Shortening latency and institutionalizing rapid validation is one of the highest-leverage moves you can make.

Best Practices for Data-Driven Decision Making

Analyzing and Interpretation Strategies

Start by blending statistical rigor with domain context: run hypothesis tests with at least 80% power, report effect sizes and confidence intervals, and avoid relying on p-values alone. You should segment analyses by cohort (e.g., acquisition channel, device, geography) to reveal heterogeneous effects - small average lifts often hide large gains in a specific segment, and lifts of 1-3% commonly require tens of thousands of observations to detect reliably.

Use model-agnostic interpretation tools (SHAP, partial dependence plots) for complex models so operations and product teams can act on outputs, and pair predictive work with causal techniques - difference-in-differences, propensity-score matching, or randomized pilots - to separate correlation from causation. Watch for data leakage, confounders, and lookahead bias; a model that performs well in-sample but suffers from these issues can produce dangerously misleading recommendations in production.

Integrating Insights into Business Strategy

Translate predictions into concrete actions by mapping model outputs to specific workflows and KPIs: convert a churn risk score into a prioritized outreach list with defined offers, or feed purchase propensity into real-time bid decisions for ads. You should set explicit targets (for example, a 10% relative reduction in churn within 90 days for the high-risk cohort) and measure the intact business metric, not just model lift.

Assign clear decision rights and build cross-functional squads so insights move from analysis into deployment: product owners own experiments, data engineers maintain feature pipelines, and marketers own creative A/B tests. Require an ROI projection before full rollout - pilots that show a single-digit percent lift in controlled tests can still be worthwhile if unit economics and scale justify automated rollout.

Operationalize by embedding outputs into systems: expose model scores via APIs, add them to CRM records, and create playbooks for each score band. For example, automate a three-step email + discount sequence for the top 5% highest-risk customers and track net lifetime value over 6 months to validate the strategy.

Continuous Monitoring and Adjustment

Implement end-to-end monitoring that tracks both model health (AUC, precision/recall) and business KPIs (conversion rate, revenue per user). Set concrete alert thresholds - for instance, alert when AUC drops by >0.03 or when feature distribution shift exceeds a predefined divergence - because silent model drift is one of the fastest routes to degraded business outcomes.

Adopt an MLOps cadence: shadow deployments, canary tests, and automated retraining pipelines with human signoff. Models in fast-moving environments typically need daily or weekly retraining, while stable models can be scheduled monthly or quarterly; balance retraining frequency against compute costs and the observed rate of data drift.

Further instrument your pipelines with golden datasets, data-quality gates (missingness, outliers), and post-deployment experiments: maintain rollback procedures and tag every model version with performance metadata so you can audit decisions and iterate quickly. Automated monitoring combined with clear escalation paths reduces downtime and preserves trust in data-driven decisions.

Final Words

Ultimately, you must treat data-driven insight as an iterative practice: collect clean, representative data, blend behavioral and contextual signals, and apply careful feature engineering so your models can forecast demand, churn, and conversion with actionable precision. Pair quantitative forecasts with qualitative validation, tie predictions to defined KPIs, and maintain transparent pipelines so stakeholders can assess assumptions and trust outcomes.

Ultimately, building this discipline lets you anticipate market shifts and personalize experiences at scale; sustain performance by monitoring model drift, running controlled tests, and aligning analytics with business objectives and privacy obligations so your predictions consistently drive better customer engagement and measurable growth.

FAQ

Q: What data sources should I use to analyze and predict online consumer trends?

A: Combine first-party data (website and app analytics, CRM and transaction logs, email engagement, in-app behavior), second-party or partner feeds (cohort data, cross-platform user joins), third-party datasets (demographics, market panels, ad platform signals), public indicators (search trends, social listening, economic statistics) and qualitative inputs (surveys, reviews, customer support transcripts). Ensure each source includes timestamps, user or session identifiers for stitching, and metadata about acquisition channel and device to enable segmentation and time-series analysis.

Q: How should I clean and prepare data before analysis and modeling?

A : Standardiser les identifiants et les horodatages, supprimer les doublons et le trafic généré par des robots, gérer les valeurs manquantes par imputation ou marquage approprié au domaine, normaliser et encoder les variables catégorielles, segmenter les flux d'événements en sessions, créer des variables dérivées (récence, fréquence, montant, heure, indicateurs de cohorte), agréger les données à la granularité temporelle adéquate et masquer ou supprimer les données personnelles identifiables (DPI) pour des raisons de conformité. Inclure des contrôles de qualité des données, des stratégies d'échantillonnage pour les grands ensembles de données et un pipeline ETL reproductible qui enregistre les transformations et les versions de schéma.

Q : Quelles méthodes analytiques et prédictives sont les plus performantes pour prévoir les tendances de consommation ?

A : Utilisez une approche mixte : modèles de séries temporelles (ARIMA/SARIMAX, Prophet) et méthodes d’espace d’état pour les prévisions de référence ; arbres de décision à gradient boosté (XGBoost, LightGBM) ou forêts aléatoires pour les prédictions tabulaires ; modèles de séquences (LSTM, variantes de Transformer) pour la prévision du parcours utilisateur et des séquences de sessions ; clustering et segmentation pour les cohortes comportementales ; extraction de règles d’association pour l’analyse de paniers ; modèles d’uplift et causaux pour estimer les effets des traitements. Combinez les approches au sein d’ensembles et privilégiez les outils d’explicabilité (SHAP, dépendance partielle) pour interpréter les facteurs à l’origine des tendances prédites.

Q : Comment valider et évaluer les modèles prédictifs du comportement des consommateurs en ligne ?

A : Utilisez une répartition temporelle des ensembles d'entraînement, de validation et de test, ainsi qu'une validation croisée à origine glissante pour assurer la stabilité temporelle. Mesurez les performances à l'aide de métriques appropriées : RMSE/MAE/MAPE pour les prévisions continues, précision/rappel/AUC pour la classification, et des indicateurs clés de performance métier tels que l'augmentation du taux de conversion ou le revenu par utilisateur pour évaluer l'impact. Testez les modèles sur des événements historiques, effectuez des tests de robustesse face à la saisonnalité et aux jours fériés, calibrez les résultats probabilistes, surveillez la dérive des données et du modèle en production et validez les affirmations causales par des expériences randomisées ou des plans quasi-expérimentaux lorsque cela est possible.

Q : Comment puis-je mettre en œuvre des analyses de données pour influencer les décisions en matière de marketing et de produits ?

A : Déployer les modèles dans les pipelines de production pour le scoring par lots ou l'inférence en temps réel, connecter les sorties à une plateforme de données clients ou à un système d'automatisation marketing pour personnaliser les messages et les offres, présenter les prédictions et les segments dans des tableaux de bord pour les décideurs, mettre en œuvre des déploiements basés sur l'expérimentation (tests A/B ou tests de validation) pour mesurer l'impact, établir des calendriers de réentraînement et de surveillance, appliquer une gouvernance pour la confidentialité et les risques liés aux modèles, et boucler la boucle en réintégrant les résultats post-déploiement dans les données d'entraînement pour améliorer continuellement les prédictions.